Large language models (LLMs) like GPT-3 have demonstrated impressive natural language capabilities, but their inner workings remain poorly understood. This "black box" nature makes LLMs potentially problematic when deployed in sensitive real-world applications.

What is the LLM Black Box Problem?

Language Learning Models (LLMs) are powerful tools that rely on deep learning to process and analyse vast amounts of text. Today they're the brains behind everything from customer service chatbots to advanced research tools.

Yet, despite their utility, they operate as "black boxes," obscuring the logic behind their decisions. This opacity isn't just a tech puzzle; it's a problem with safety and ethical implications. If we don't know how these systems reach a conclusion, how we use them effectively and more importantly can we trust them with critical decisions like medical diagnoses or financial risk assessments?

Scale and Complexity of LLMs

LLMs contain massive numbers of parameters - GPT-3 has 175 billion parameters, while more recent models can have trillions. This enormous scale makes it nearly impossible to fully comprehend their internal logic. Each parameter interacts in complex ways with many others in the neural network architecture of LLMs. This entanglement means the overall behavior of the system cannot be easily reduced to discernible rules.

The intricacy is compounded as the scale increases. With trillions of parameters, LLMs exhibit emergent capabilities - abilities arising from the system as a whole that are not predictable by examining individual components. For instance, a capability like common sense reasoning emerges from exposure to massive datasets, not hard coding of rules.

The scale and complexity of LLMs lead to innate difficulties in interpreting how they process language or arrive at conclusions. Their decision-making appears opaque even to their own creators. This hinders diagnosing unwanted behaviors or biases the models exhibit. Reducing scale could improve interpretability but likely at the cost of capability. The scale itself enables behaviors impossible with smaller models. There are thus intrinsic tradeoffs between scale, capability, and interpretability.

Lack of Transparency by LLM Developers

Most major LLMs are developed by large tech companies like Google, Meta, and OpenAI. These models are proprietary systems whose complete details are not publicly revealed. The companies treat their LLMs as trade secrets, disclosing only limited information about model architecture, training data, and decision-making processes.

This opacity prevents independent auditing of the systems for biases, flaws, or ethical issues. Without transparency, it is impossible to verify if unsafe data is used in training or if the models exhibit unfair biases. The public has to simplistically trust the creators' assurances.

However, it has become clear even the creators do not fully understand how their models operate. They may comprehend the overall architecture but cannot explain the complex emergent behaviors that arise from vast scales. For example, OpenAI admitted their AI text detector tool did not reliably work weeks after touting its release. If the developers themselves cannot explain their LLMs' logic, truly auditing them becomes even more challenging.

The lack of transparency enables deployment of potentially problematic systems with limited accountability. It also inhibits identifying and addressing issues with LLMs. Demands for tech companies to open up their AI systems to scrutiny are growing. But incentives to maintain secrecy and proprietary advantages remain strong.

Consequences of the LLM Black Box Problem

The lack of interpretability in LLMs poses significant risks if the black box problem is not meaningfully addressed. Some potential adverse consequences include:

Flawed Decisions

The lack of transparency into LLM decision-making means any biases, errors or flaws in their judgment can go undetected and unchecked. Without visibility into their reasoning, there is limited ability to identify problems or inconsistencies in how LLMs analyze information and arrive at conclusions.

This presents significant risks when deploying LLMs in sensitive real-world applications like healthcare, finance and criminal justice. As black boxes, their decisions cannot be audited for soundness and ethicality. Incorrect, inappropriate or unethical choices could result without realizing it prior to negative impacts.

For example, a medical LLM could make diagnosis recommendations based partly on outdated information or unchecked biases that were embedded in training data. Without transparency, its reasoning cannot be validated as medically sound versus discriminatory. Similarly, a hiring LLM may discount qualified candidates due to gender if errors in judgment logic go uncorrected as a black box system.

Real world testing alone is insufficient to catch all flaws without internal visibility. Proactively solving the black box problem is critical to preventing rollout of LLMs that codify bias and faulty reasoning leading to real harms. Explainability is key to auditing the integrity of LLM decision-making before high-stakes deployment.

Difficulty Diagnosing Errors

When LLMs make inaccurate or wrong predictions, the lack of transparency into their decision-making presents obstacles to identifying the root cause. Without visibility into the model logic and processing, engineers have limited ability to diagnose where and why the systems are failing.

Pinpointing whether bad training data, flaws in the model architecture, or other factors are responsible for errors becomes a black box puzzle. LLMs may reproduce harmful biases or make irrational conclusions that go undetected until after deployment. The root causes underlying these failures cannot be determined without deciphering the model's reasoning.

For example, a hiring algorithm may inadvertently discriminate against certain candidates. But auditing the system's code reveals little without grasping how the LLM ultimately weighs different features and makes judgments. Without diagnosing the source of problems, they cannot be reliably fixed or prevented from recurring.

The difficulty of probing LLMs also hinders validating that the systems are working as intended before real-world release. More rigorous testing methodologies are needed to stimulate edge cases and diagnose responses without internal visibility. Solving the black box problem is key to enabling proper error analysis and correction.

Constrained Adaptability

The opacity of LLMs also restricts their ability to be adapted for diverse tasks and contexts. Users and developers have limited visibility into the models' components and weighting to make targeted adjustments based on applications.

For example, a hiring LLM may be ineffective at evaluating candidates for an engineering role because it cannot de-emphasize academic credentials versus demonstrated skills. Without model transparency, it is difficult to re-tune components to improve adaptability across domains.

Similarly, a medical LLM may falter in rare disease diagnosis because the data imbalances in training obscured those edge cases. But the black box nature makes it challenging to re-calibrate the model's diagnostic weighting and reasoning for specialized tasks.

LLMs often fail or underperform when applied to new problems because they cannot be fine-tuned without visibility into their inner workings. Users cannot easily discern how to tweak the systems for their particular needs. Solving the black box problem would enable superior adaptation across diverse real-world situations and use cases.

Concerns Over Bias and Knowledge Gaps

While LLMs may have access to enormous training data, how they process and utilize that information depends on their model architectures and algorithms. Their knowledge is static, locked at the time of training. But their reasoning can demonstrate dynamic and unpredictable biases based on those architectural factors.

For example, a medical LLM could exhibit demographic biases if trained on datasets containing imbalanced representation. But auditing its knowledge limitations requires going beyond the training data itself to examine how it is weighed by the model.

Similarly, an LLM's knowledge on niche topics could be deficient if the pre-training failed to cover those domains. But it may incorrectly gain overconfidence in generating speculative text on those topics unchecked. This speculation raises risks of generating factual hallucinations outside its actual knowledge.

So while LLMs contain extensive knowledge, how they apply it relies on opaque processing mechanics. Simply adding more training data does not necessarily address gaps or biases that emerge from the black box system logic. Model reasoning itself, not just model knowledge, needs transparency to evaluate LLMs meaningfully.

Solving the black box problem provides fuller insight into when LLMs are operating reliably versus speculatively outside core knowledge areas. Their information is only as useful as their processors.

Legal Liability

The black box nature of LLMs also creates uncertain legal liability if the systems were to cause harm. If an LLM made a decision or recommendation that led to detrimental real-world impacts, its opacity would make it difficult to ascertain accountability.

Without transparency into the model's reasoning and data handling, companies deploying LLMs could evade responsibility by obfuscating how their systems work. Proving misuse or negligence becomes challenging when internal processes are inscrutable.

For example, if a medical LLM provided faulty treatment advice leading to patient harm, the opaque logic would hinder investigating if the LLM acted negligently or against regulations. Those impacted may have limited legal recourse without clearer model explainability.

This legal gray zone increases risks for companies deploying LLMs in sensitive areas like health, finance, and security. They may face lawsuits, restrictions or even bans if they cannot explain their LLMs' decision-making and show diligence. Solving the black box problem is critical for establishing proper accountability and governance frameworks through model transparency.

Reduced Trustworthiness

The black box opacity of LLMs makes it impossible to externally validate whether they are operating fairly and ethically, especially in sensitive contexts. For applications like healthcare, finance, and hiring, lack of transparency prevents ascertaining if the systems harbor problematic biases or make decisions based on unfair criteria.

Without visibility into internal model reasoning, users have limited ability to audit algorithms that could profoundly impact people's lives. Verifying criteria like gender neutrality and racial fairness is inhibited when examining inputs and outputs alone. Vetting LLMs as unbiased requires understanding their decision-making processes.

This reduced trustworthiness presents challenges for integrating LLMs into functions with significant real-world consequences. Regulators likely will not permit widespread deployment of LLMs in high-stakes domains if their judgments cannot be examined for integrity. Methods to enable third-party audits of biases and equity in LLMs are needed to build confidence in their applicability.

Solving the black box problem is essential for enabling accountability around ethics and fairness. Users cannot reasonably trust in LLMs operating as black-box systems. Transparency and explainability will be critical for unlocking beneficial and responsible applications.

Degraded User Experiences

The lack of transparency into LLM decision-making also diminishes the quality of user experiences and interactions. When users do not understand how an LLM works under the hood, they cannot craft prompts and inputs more effectively to arrive at desired outputs.

For example, a legal LLM may fail to provide useful insights if queried with imprecise terminology. But users cannot refine inputs without knowledge of how the model parses language. They are left guessing how to prompt it through trial and error.

Similarly, a creative writing LLM may generate text lacking proper continuity if users cannot guide its processing by weighting keywords or themes in prompts. Without visibility into operative logic, users cannot shape interactions for coherent outputs.

Opaque LLMs force users to treat systems as oracles producing hit-or-miss results. But model transparency could empower users to interact with them more productively. Solving the black box problem provides users agency rather than randomness in leveraging LLMs to their full potential across applications.

Risk of Abusing Private Data

LLMs require massive amounts of training data, including datasets containing private personal information. However, the black box opacity makes it impossible to verify how this sensitive data is being utilized internally. Without transparency into data handling processes, there are risks of exploiting or misusing personal information improperly.

For example, medical LLMs trained on patient health records could potentially expose that data or make determinations about people based on factors like demographics rather than sound medical analysis. But auditing how the LLM uses sensitive training data is inhibited by the black box problem.

Similarly, LLMs deployed in hiring, lending, or housing could abuse factors like race, gender, or ethnicity if unfair biases become embedded during opaque training processes. There is little recourse if people's personal data is being exploited or misused without explainability.

Requirements like data masking, selective model access to data fields, and internal auditing mechanisms are likely needed to reduce risks of misappropriating private data. But implementing safeguards remains a challenge when LLMs processes are not sufficiently interpretable. Solving the black box problem is key to securing personal data used to develop powerful AI systems.

Unethical Uses

The opacity of LLMs creates risks of enabling unethical applications without accountability. For example, deploying LLMs for mass surveillance could infringe on privacy rights if their internal processes are not transparent. LLMs could be secretly analyzing private data and communications without explainability into their activities.

In addition, the lack of transparency facilitates development of systems aimed at potentially unethical persuasion or influence. Manipulative LLMs could be designed to covertly sway thinking in undesirable ways that evade detection if their inner workings remain hidden.

The absence of oversight into LLM decision-making provides cover for misuse in systems that impact human lives. Companies or governments can claim systems operate ethically while obfuscating processes that infringe on rights and values.

Without solving the black box problem, there are limited technical means to prevent misappropriation of LLMs that violate norms. Ethical implementation requires transparency to verify alignment with human principles in impactful applications. Opaque LLMs enable unchecked harms.

These consequences can further lead to:

- Loss of public trust: Widespread use of inscrutable black box models could undermine public confidence and acceptance of AI systems.

- Stifled innovation: Authorities may limit applications of LLMs they perceive as untrustworthy, depriving society of potential benefits.

- Privacy violations: Lack of safeguards into how private data is used during training could lead to exploitation without explainability requirements.

- Limited oversight: The absence of transparency hinders effective governance and oversight to ensure ethical and safe LLM deployment.

Solving the black box problem is critical to unlocking the full potential of LLMs. If their thinking remains opaque, the risks likely outweigh the benefits for many applications. Increased explainability is key to preventing undesirable consequences.

Potential Solutions to the LLM Black Box Problem

While the LLM black box problem poses formidable challenges, viable techniques are emerging to enhance model transparency and explainability. By rethinking model architectures, training processes, and inference methods, LLMs could become interpretable without sacrificing capabilities.

Promising approaches include glass-box neural networks, integration of knowledge graphs, and explainable AI techniques. Though difficult obstacles remain, steady progress is being made towards acceptable solutions. The path forward lies in cross-disciplinary collaboration to usher in transparent yet powerful LLMs fit for broad deployment. With rigorous research and responsible innovation, the black box can be cracked open.

“Glass-Box” Architectures

One approach to mitigate the black box problem is developing more transparent "glass-box" model architectures and training processes. This involves designing LLMs to enable better visibility into their internal data representations and reasoning.

Techniques like attention mechanisms and modular model structures can help surface how information flows through layers of the neural network. Visualization tools could illustrate how different inputs activate certain portions of the model.

In addition, augmented training techniques like adversarial learning and contrastive examples can probe the model’s decision boundaries. Analyzing when the LLM succeeds or fails on these special training samples provides insights into its reasoning process.

Recording the step-by-step activation states across layers during inference can also shed light on how the model performs logical computations. This could identify signals used for particular tasks.

Overall, constructing LLMs with more explainable components and training regimes can improve model transparency. Users could better audit system behaviors, diagnose flaws, and provide corrective feedback for glass-box models. This enables LLMs to be scrutinized and refined continually for fairer performance.

Knowledge Graphs

Incorporating structured knowledge graphs into LLMs is another promising approach to mitigate the black box problem. Knowledge graphs contain factual information in a networked, semantic form that could bolster model reasoning in an explainable way.

Encoding curated knowledge into LLMs can reduce reliance on purely statistical patterns from training data that contribute to opacity. For example, medical LLMs could leverage knowledge graphs of ontological biomedical data as an additional transparent layer of structured reasoning about diseases, symptoms, treatments, etc.

During inference, LLMs could reference knowledge graphs to retrieve relevant facts and relationships to inform and explain conclusions. This supplements the intrinsic pattern recognition of neural networks with a grounded knowledge framework.

LLMs trained jointly with knowledge graphs may better articulate their factual reasoning and credibility for given predictions. This could enable identifying gaps or misconceptions in their knowledge through auditing the knowledge graph contents.

Incorporating structured, human-understandable knowledge can thus augment model decision-making with explainable components. This promotes transparency while enhancing capabilities in domain-specific applications.

Explainable AI

Research into explainable AI (XAI) aims to develop techniques that describe and visualize how complex models like LLMs arrive at particular outputs or decisions. Approaches such as saliency mapping and rule extraction help attribute model results back to input features and learned relationships.

For example, saliency mapping highlights the most influential input words that activated certain model logic for generating text. This technique can reveal if inappropriate correlations were learned during training.

Meanwhile, rule extraction attempts to distill patterns in the model’s statistical connections into simple IF-THEN rules that users can interpret. This elicits fairer rules the model may be implicitly following.

Interactive visualization tools are also emerging to enable easier debugging of model internal states in response to test inputs. Users can tweak inputs and observe effects on downstream logic and outputs.

Overall, XAI techniques can effectively expose model reasoning in ways familiar to humans. But challenges remain in scaling explanations across LLMs’ vast parameter spaces. Integrating XAI into model training may yield models that are inherently self-explaining by design.

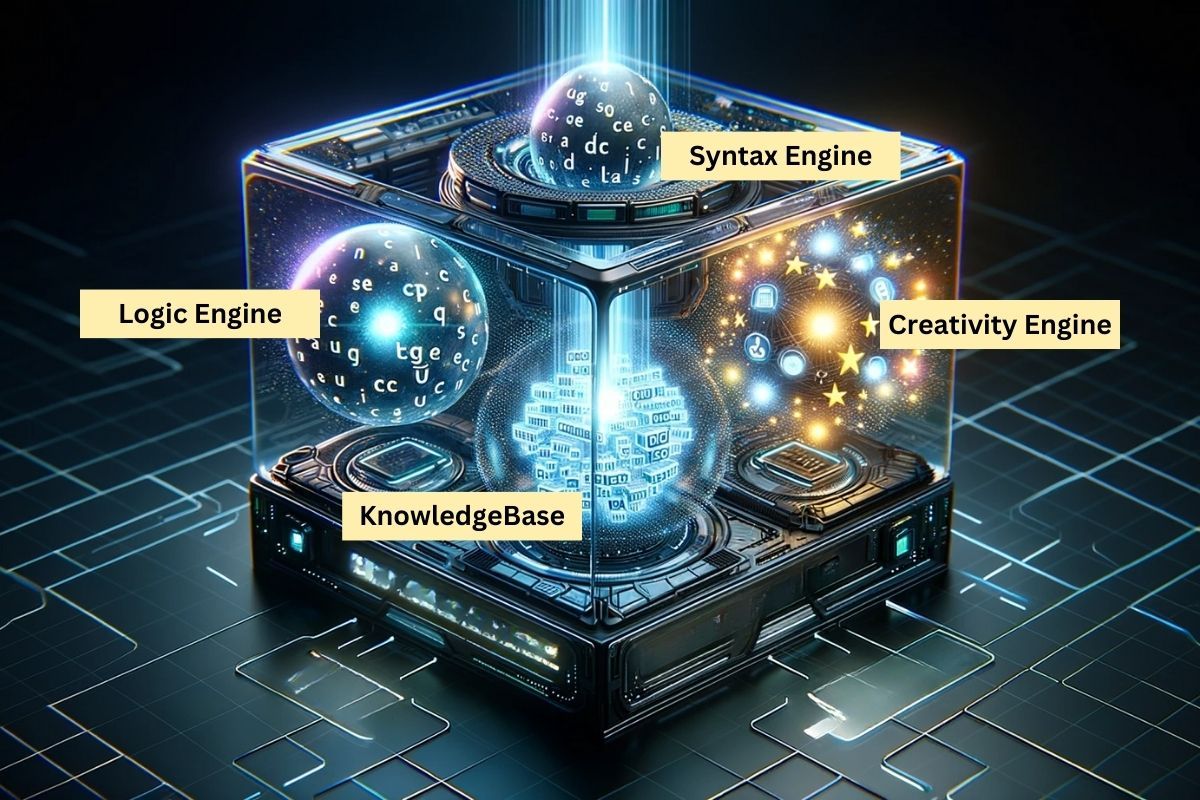

The LITE Box LLM Framework

One approach we've developed at the Prompt Engineering Institute for creating more interpretable LLMs is using a transparent processing framework called the Linguistic Insight Transparency Engine (LITE) Box.

This breaks down the LLM into distinct reasoning components:

Syntax Engine: The Syntax Engine handles structural and grammatical analysis of text. It focuses on tasks like parsing input, correcting errors, organizing coherent sections, and extracting semantics. This engine provides a robust linguistic foundation for the LLM's operations.

Creativity Engine: This engine drives creative aspects like idea generation, narrative writing, and stylistic formulation. It adds flair and depth through techniques like metaphor, descriptive language, and viewpoint exploration. The Creativity Engine injects originality into the LLM's output.

Logic Engine: The Logic Engine analyzes context and intent to evaluate relevance and accuracy. It performs deductive reasoning to check claims, assess arguments, and ensure factual reliability. This promotes logical consistency in the LLM's conclusions.

Knowledgebase: The Knowledgebase contains the world facts and information accumulated during training. The Logic Engine leverages this repository to ground LLM responses in empirical evidence. Separating storage from processing aids transparency.

The compartmentalized LITE Box framework provides interpretability into the distinct reasoning faculties. Users can better understand the LLM's approach by analyzing the interactions between these transparent components. This granular visibility enables a truly explainable LLM.

The transparent LITE Box design mitigates blackbox opacity issues. By separating language processing into explainable modules, users can better diagnose flaws and fine-tune performance. Prompt engineers also benefit from understanding how syntactic, logical, and creative components interact to shape output.

This visibility enables selecting the optimal LLM architecture for specific tasks. It also allows strategic prompting to leverage different reasoning engines. Targeted activation of creativity vs logic modules can yield superior results. Overall, the LITE Box's granular transparency unlocks prompt engineering feats not possible with monolithic blackbox LLMs.

The LITE Box framework represents one promising direction to enable interpretable LLMs. I will present more details on this modular architecture in a future, more comprehensive article. By demystifying internal processes, such transparent frameworks can realize explainable yet capable LLMs that earn user trust.