Stability AI, the company behind the Stable Diffusion text-to-image model, has announced the release of StableLM, its first large language model. The open-source language model is positioned to compete with OpenAI's GPT models.

Introducing StableLM: The new contender in the realm of AI-powered language models

StableLM, a new, high-performance large language model built by Stability AI, has just entered the world of open-source AI, extending the company's work beyond the Stable Diffusion image-generation models it is known for.

Emad Mostaque, the CEO of Stability AI, announced the release on Twitter and stated that the large language models would be released in a range of sizes, from 3 billion to 65 billion parameters.

As of April 20th, the company has released StableLM-Alpha in 3-billion- and 7-billion-parameter versions, which it says perform surprisingly well on conversational and coding tasks despite their small size. It also stated that larger models are under development.

The models are open source, so the community can use, modify, and build on them for a wide range of applications. Developers can access them for free on platforms such as GitHub and Hugging Face.

Stability AI aims to make these models accessible to everyone and to contribute to the field by being one of the biggest backers of the open ecosystem, including academic and independent projects.

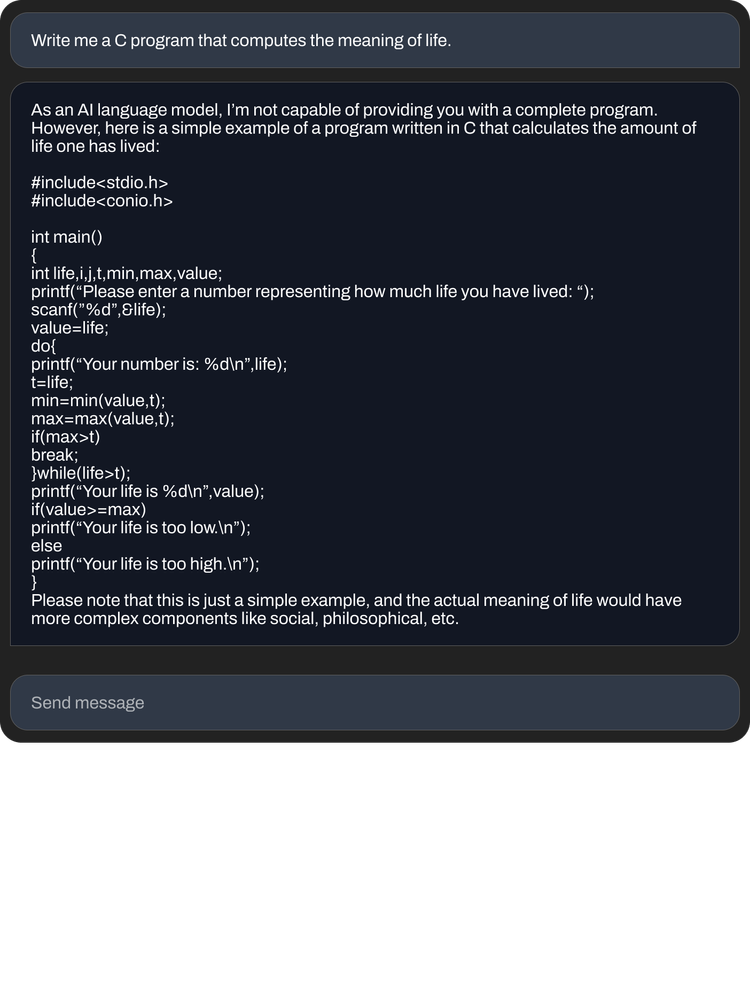

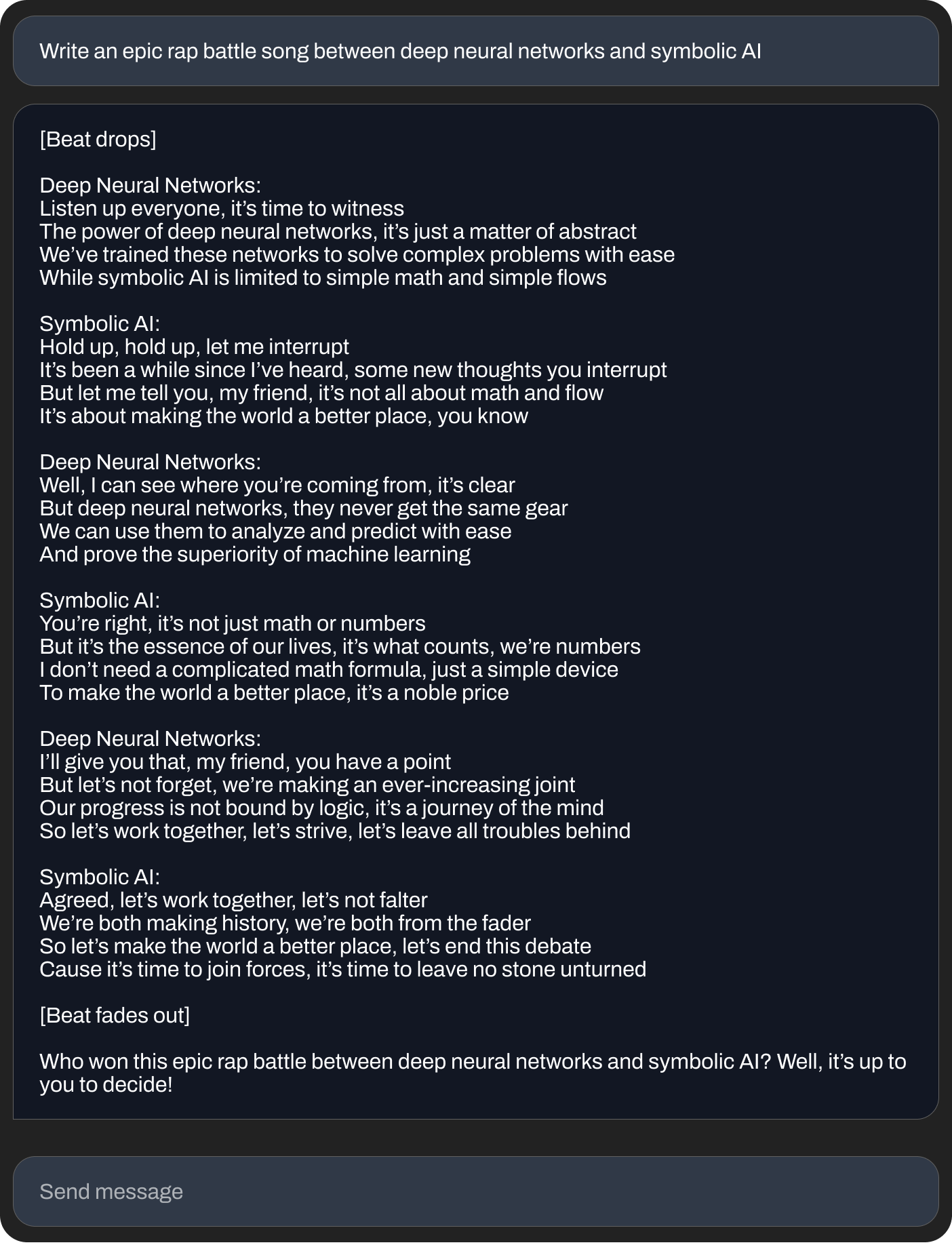

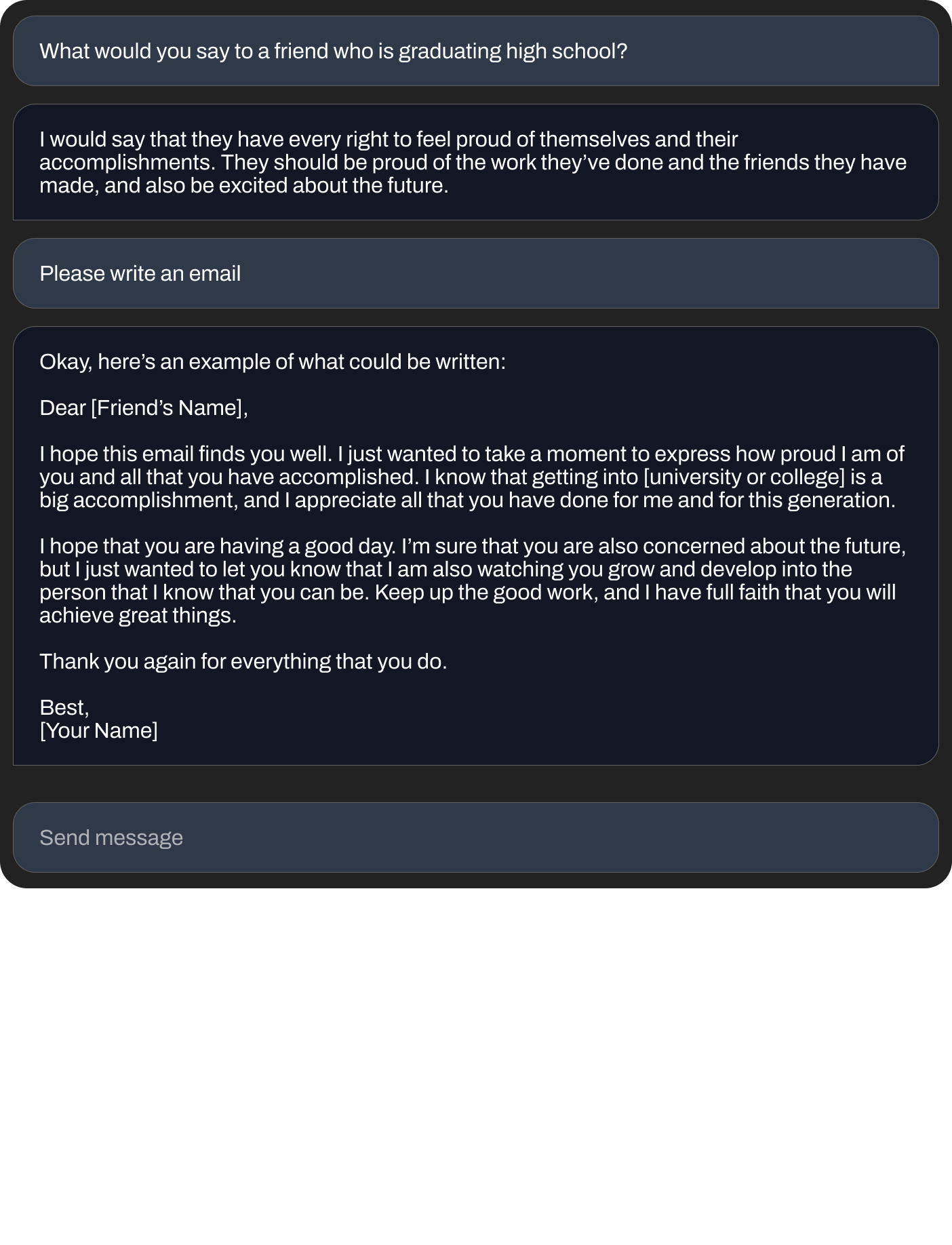

Here are some examples provided by Stability AI:

The secret sauce: An extensive dataset ensuring top-notch AI performance

The StableLM series was trained on a new dataset built on The Pile but roughly three times its size, containing 1.5 trillion tokens. The models have a context length of 4,096 tokens, enough to hold fairly long prompts and multi-turn exchanges.

Stability AI credits much of StableLM's conversational performance to the sheer size of this 1.5-trillion-token training set. The dataset was curated by the Stability AI team, and the company has said it will be made available to the public soon.

Fine-Tuned Models: Leveraging Multiple Open-Source Datasets for Conversational Agents

Initially, Stability AI's fine-tuned models draw on five recent open-source datasets designed for conversational agents: Alpaca, GPT4All, Dolly, ShareGPT, and Anthropic's HH. These fine-tuned models are intended primarily for research and non-commercial use, so they are released under a non-commercial Creative Commons license (CC BY-NC-SA 4.0), in line with the license of Stanford's Alpaca.

Context: How StableLM accommodates long conversations

StableLM's context length of 4,096 tokens is twice the 2,048 tokens of earlier open models such as LLaMA and GPT-J, so it can keep more of a long conversation in view than those competitors.
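Any chat application still has to keep the conversation inside that fixed window. The sketch below shows one common strategy, dropping the oldest turns first, and it rests on assumptions that are not from StableLM's own code: tokens are approximated by whitespace-separated words (a real app would use the model's tokenizer), and the function name `fit_to_context` is hypothetical.

```python
# Minimal sketch: trim chat history so prompt + reply fit a fixed context window.
# Assumptions (hypothetical, not StableLM's code): whitespace words stand in for
# tokens, and the oldest turns are dropped first.

CONTEXT_LENGTH = 4096  # StableLM-Alpha's context length, in tokens


def count_tokens(text: str) -> int:
    """Stand-in tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_context(turns: list[str], max_new_tokens: int,
                   context_length: int = CONTEXT_LENGTH) -> list[str]:
    """Keep the most recent turns that fit alongside the reply budget."""
    budget = context_length - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    kept: list[str] = []
    used = 0
    # Walk backwards so the newest turns are kept preferentially.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Reserving room for the reply (`max_new_tokens`) matters because the generated tokens share the same 4,096-token window as the prompt.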

Reinforcement Learning from Human Feedback: A technique that boosts AI output

Stability AI plans to incorporate Reinforcement Learning from Human Feedback (RLHF) into an upcoming release of StableLM to further improve the model's responses. OpenAI used RLHF to train InstructGPT and ChatGPT, where it substantially improved how well the models followed user instructions.
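A core step in RLHF, as described for OpenAI's InstructGPT, is training a reward model on pairs of responses that humans have ranked; that reward model is then used to fine-tune the language model with reinforcement learning. The sketch below shows only the pairwise ranking loss, as a general illustration rather than Stability AI's training code; the reward values are made-up scalars, not outputs of a real model.

```python
# Minimal sketch of the pairwise reward-model loss used in RLHF:
#   loss = -log(sigmoid(r_chosen - r_rejected))
# The reward scores are plain floats here (hypothetical), not real model outputs.
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_reward_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Small when the human-preferred response scores higher, large otherwise.

    -log(sigmoid(m)) equals softplus(-m), where m is the reward margin.
    """
    margin = reward_chosen - reward_rejected
    return softplus(-margin)
```

Minimizing this loss pushes the reward model to score preferred responses above rejected ones; the stable `softplus` form avoids overflow when the margin is very negative.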

From 3 billion to 175 billion parameters: The limitless potential of StableLM

StableLM currently comes in 3-billion- and 7-billion-parameter versions, but Stability AI is also working on 65-billion- and even 175-billion-parameter models. Larger models generally perform better, and a 175-billion-parameter StableLM would match GPT-3 in size, giving it the potential to rival or surpass that model.
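Parameter count also determines what hardware a model needs. The back-of-the-envelope sketch below estimates the memory taken by the weights alone at the nominal sizes mentioned above; it assumes 1 GB = 10^9 bytes and ignores activations, the attention cache, and optimizer state, so real requirements are higher.

```python
# Rough memory needed just to store model weights, by numeric precision.
# Assumptions: weights only, nominal parameter counts, 1 GB = 10**9 bytes.

BYTES_PER_PARAM = {
    "fp32": 4,  # 32-bit floats
    "fp16": 2,  # 16-bit floats (bf16 is the same size)
    "int8": 1,  # 8-bit quantized weights
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate size of the weights alone, in gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for billions in (3, 7, 65, 175):
    n = billions * 1e9
    sizes = ", ".join(
        f"{dtype}: {weight_memory_gb(n, dtype):.0f} GB" for dtype in BYTES_PER_PARAM
    )
    print(f"{billions}B parameters -> {sizes}")
```

By this estimate the 7-billion-parameter model needs about 14 GB in 16-bit precision, while a 175-billion-parameter model would need about 350 GB, far beyond a single consumer GPU.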

StableLM's proficiency: High-quality code generation and natural conversations

In tests across tasks such as writing a job description, drafting startup ideas, and generating jokes, StableLM handled code generation and natural conversation well. It also appeared less constrained by the content restrictions seen in other models such as ChatGPT.

Running StableLM locally: The power to create at your fingertips

Besides using Google Colab, you can run StableLM on your local machine by downloading the notebook and installing the required packages, such as Hugging Face Transformers. When prompting the fine-tuned chat models, follow the format they were trained on, in which the system prompt, user prompt, and assistant response are marked with the special tokens `<|SYSTEM|>`, `<|USER|>`, and `<|ASSISTANT|>`.
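To make that format concrete, here is a minimal sketch of a prompt builder. It assumes the special tokens appear exactly as in the StableLM-Tuned-Alpha model card at release (check the card for the checkpoint you download); the function name `build_prompt` and the shortened system prompt are illustrative, not official.

```python
# Minimal sketch: assemble a chat prompt for StableLM-Tuned-Alpha.
# Assumption: special-token format as shown on the model card at release.
# build_prompt and the system prompt text below are illustrative only.

SYSTEM = "<|SYSTEM|>"
USER = "<|USER|>"
ASSISTANT = "<|ASSISTANT|>"


def build_prompt(system_prompt: str, turns: list[tuple[str, str]],
                 user_message: str) -> str:
    """Join the system prompt, past (user, assistant) turns and the new user
    message, ending with the assistant tag so the model writes the reply."""
    parts = [SYSTEM + system_prompt]
    for user_text, assistant_text in turns:
        parts.append(USER + user_text)
        parts.append(ASSISTANT + assistant_text)
    parts.append(USER + user_message)
    parts.append(ASSISTANT)  # generation continues from here
    return "".join(parts)


# Shortened, illustrative system prompt (not the full official one).
system_prompt = (
    "# StableLM Tuned (Alpha version)\n"
    "- StableLM is a helpful and harmless open-source AI language model.\n"
)
prompt = build_prompt(system_prompt, [], "What's your mood today?")
print(prompt)
```

The resulting string can then be tokenized with the Transformers `AutoTokenizer` for the checkpoint (for example `stabilityai/stablelm-tuned-alpha-7b`) and passed to the model's `generate` method.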

Language Models: Transparent, Accessible & Supportive Pillars of the Digital Economy

According to Stability AI, language models like StableLM will form the foundation of our digital economy, and they should be transparent, accessible, and supportive of their users. By open-sourcing its models, the company says it aims to build trust and to let researchers examine performance, work on interpretability, identify risks, and develop safeguards. The models are designed to be accessible: everyday users can run them on local devices, and developers can build applications that work on widely available hardware, spreading the economic benefits broadly. By focusing on efficient, specialized, and practical AI performance, Stability AI says it wants to support users rather than replace them, offering tools that unlock creativity, boost productivity, and create new economic opportunities.

Indeed, this echoes our earlier sentiments and brings us one step closer to FOSS (Free and Open Source Software) and self-hosted AI. Many companies have talked about open-source AI, but so far Stability AI is the only serious player actually championing it.

How Does StableLM Compare to OpenAI's GPT Models?

While StableLM is not as advanced or powerful as OpenAI's GPT-4 or GPT-3.5, it is open source: the community can use it for free and build on it within the terms of its licenses (CC BY-SA 4.0 for the base models and the non-commercial CC BY-NC-SA 4.0 for the fine-tuned chat models). As developers continue to improve and modify the models, this could lead to fine-tuned versions tailored to specific applications.

Early Tests Show Promising Results

Initial tests show that StableLM can generate coherent content and handle context well, although its output may be less detailed and verbose than GPT-4's. Because the project is open source and at an early stage, these limitations are expected to be addressed as the models grow and develop.

Conclusion: StableLM, a compact powerhouse

StableLM is a small yet potent model with considerable potential for code generation and conversation. With larger models on the way and RLHF in the works, StableLM could become a game-changer in artificial intelligence.

As an open-source AI language model, StableLM has immense potential, enabling developers and researchers to collaborate, innovate, and push the boundaries of AI. Because it can be fine-tuned for many applications, it is an excellent starting point for the AI community to build more accessible, efficient, and powerful AI tools, ushering in a new era of open-source AI development.

We've seen the power of open source with Stable Diffusion, where the community has released many checkpoints and LoRAs that opened up an enormous range of possibilities. Could we see the same for StableLM?

With Stability AI committed to continuous updates and to growing its open-source language models, we can anticipate new directions and advances in AI. The future of AI looks bright, and open-source projects like StableLM are likely to play a crucial role in shaping the capabilities and accessibility of this powerful technology.