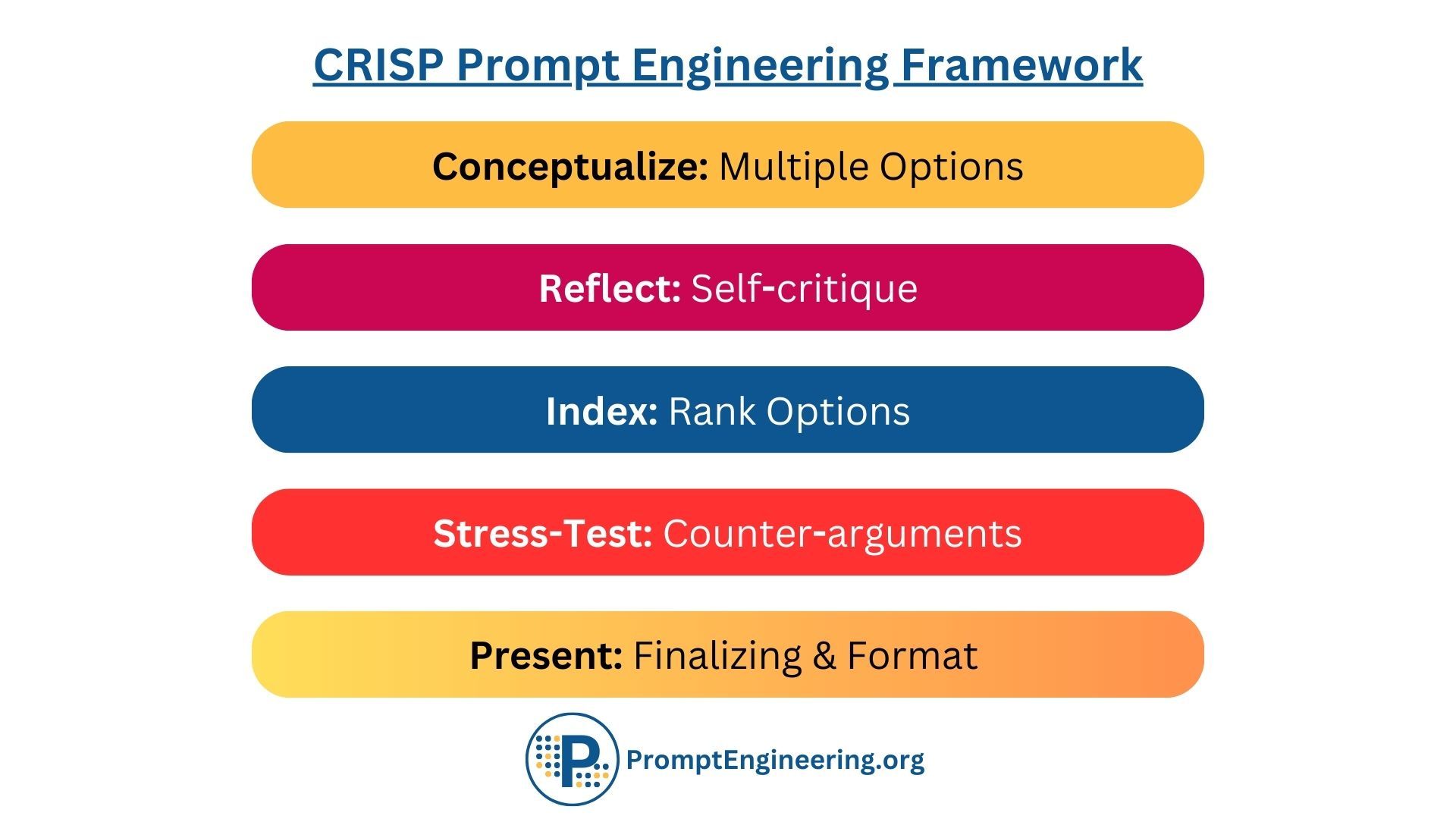

Let's take a look at the Plan-and-Solve paper, something I've been meaning to explore in depth. Its concepts have already been rolled into our CRISP prompting framework, but there's more to unpack here.

In this article, we're going to break down what the framework is all about and why it's cool. The academic world is great at coming up with these ideas, but often they don't tell us how to actually use them in real life. That's where we come in. Towards the end, we'll shift gears to see how we can apply all this theory to practical prompting strategies.

Key Takeaway

The paper introduces Plan-and-Solve (PS) Prompting, a novel approach for improving the reasoning capabilities of Large Language Models (LLMs) in zero-shot scenarios. PS Prompting enhances LLMs' ability to tackle multi-step reasoning tasks by planning and dividing tasks into subtasks, leading to fewer calculation and missing-step errors, and shows promising results compared to existing few-shot and zero-shot approaches.

Summary

- Background and Problem: LLMs, while effective in various NLP tasks, struggle with multi-step reasoning tasks. Existing methods have limitations: few-shot CoT prompting requires manually written examples, and Zero-shot-CoT suffers from calculation errors, missing-step errors, and semantic misunderstanding errors.

- Plan-and-Solve Prompting: This method has two steps: planning and execution. It prompts LLMs to first devise a plan that divides the task into subtasks and then carry out those subtasks. This method aims to reduce errors seen in Zero-shot-CoT.

- PS+ Prompting: An extension of PS Prompting, this involves more detailed instructions for variable extraction and calculation, further improving reasoning quality.

- Experimental Setup and Datasets: The approach was tested on ten datasets, including arithmetic, commonsense, and symbolic reasoning problems, using the GPT-3 model.

- Results:

  - In arithmetic reasoning, PS+ prompting outperforms Zero-shot-CoT and is comparable to few-shot methods.

  - In commonsense reasoning, PS+ prompting is superior to Zero-shot-CoT.

  - In symbolic reasoning, PS+ prompting excels in certain datasets over Zero-shot-CoT and is competitive with Manual-CoT.

- Error Analysis: PS+ prompting reduces calculation and missing-step errors compared to Zero-shot-CoT.

- Self-Consistency Evaluation: Applying self-consistency (SC) to PS+ prompting improves its performance, as shown on the GSM8K and SVAMP datasets.

- Impact of Prompts: The effectiveness of PS+ prompting varies with the prompt's detail level, indicating the sensitivity of LLMs to prompt structure.

- Limitations:

  - Designing effective prompts requires effort and careful consideration.

  - PS Prompting reduces calculation and missing-step errors but does not significantly address semantic misunderstanding errors.

- Ethical Considerations: The research uses publicly available datasets, and the proposed prompts do not collect personal information or discriminate against individuals or groups.

The paper demonstrates that PS+ prompting is a promising approach for enhancing the reasoning capabilities of LLMs in a zero-shot manner, potentially reducing the need for manual example crafting in few-shot learning scenarios.

Plan-and-Solve Prompting

The paper introduces Plan-and-Solve (PS) Prompting, a novel approach designed to enhance the reasoning capabilities of Large Language Models (LLMs) in zero-shot learning scenarios.

It addresses specific issues encountered in multi-step reasoning tasks, particularly those that arise in zero-shot Chain-of-Thought (CoT) prompting. Let's delve into the details of this method:

Understanding Plan-and-Solve Prompting

1. Two-Step Process: Planning and Execution

- Planning Phase: In this initial phase, the LLM is prompted to devise a plan by breaking down the larger task into smaller, more manageable subtasks. This involves identifying key elements or steps necessary to solve the problem.

- Execution Phase: Following the plan, the LLM executes each subtask in the devised sequence. This step-by-step approach allows the model to tackle complex problems in a structured and logical manner.

2. Objective

- The primary goal of PS Prompting is to mitigate common errors encountered in Zero-shot-CoT, chiefly missing steps in reasoning and calculation errors. As noted in the limitations above, it does not significantly reduce semantic misunderstanding errors.
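To make the two phases concrete, here is a minimal sketch of how such a prompt can be assembled. The trigger sentences are quoted as I remember them from the Zero-shot-CoT and Plan-and-Solve papers, so check the exact wording against the originals. The `build_prompt` helper and the `Q: ... A: ...` layout are my own illustrative assumptions, and no model is called.

```python
# Trigger sentences as recalled from the Zero-shot-CoT and Plan-and-Solve papers;
# verify the exact wording against the originals before relying on it.
ZERO_SHOT_COT = "Let's think step by step."
PLAN_AND_SOLVE = (
    "Let's first understand the problem and devise a plan to solve the problem. "
    "Then, let's carry out the plan and solve the problem step by step."
)


def build_prompt(question: str, trigger: str) -> str:
    """Hypothetical helper: place the trigger where the answer should begin."""
    return f"Q: {question.strip()}\nA: {trigger}"


question = (
    "A farmer has 45 apples and distributes them equally among 9 baskets. "
    "How many apples are in each basket?"
)
print(build_prompt(question, PLAN_AND_SOLVE))
```

Swapping `PLAN_AND_SOLVE` for `ZERO_SHOT_COT` gives the baseline prompt, which makes it easy to compare the two styles on the same question.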

Examples and Applications

Example 1: Arithmetic Reasoning Problem

- Problem: "A farmer has 45 apples and distributes them equally among 9 baskets. How many apples are in each basket?"

- Planning Phase: The LLM identifies the key steps:

- Determine the total number of apples.

- Identify the number of baskets.

- Plan to divide the total number of apples by the number of baskets.

- Execution Phase: The LLM carries out the calculation: 45 apples ÷ 9 baskets = 5 apples per basket.

Example 2: Commonsense Reasoning Problem

- Problem: "If it starts raining at a picnic, what should be done with the food?"

- Planning Phase: The model plans to:

- Identify the immediate action required when it starts raining (protecting the food).

- Suggest possible solutions like covering the food or moving it to a sheltered area.

- Execution Phase: The LLM suggests, "Cover the food with a waterproof cloth or move it under a tree or into a vehicle to keep it dry."

Advantages and Impact

- Structured Problem-Solving: By dividing tasks into subtasks, PS Prompting fosters a more organized and systematic approach to problem-solving.

- Reduction in Errors: This method specifically targets the reduction of calculation and reasoning errors by ensuring each step is logically planned and executed.

- Versatility: PS Prompting can be applied to various domains, from mathematical problems to situational reasoning in commonsense questions.

- Enhanced Reasoning Ability: It promotes a deeper level of understanding and reasoning, going beyond surface-level answers.

By introducing a two-phase approach of planning and execution, PS Prompting not only enhances the accuracy of LLMs in zero-shot learning scenarios but also paves the way for their application in more diverse and complex tasks. The method's emphasis on structured problem-solving and error reduction makes it a valuable tool for advancing the capabilities of LLMs in reasoning and decision-making tasks.

PS+ Prompting

PS+ Prompting is an enhanced version of the Plan-and-Solve (PS) Prompting method designed to augment the reasoning capabilities of Large Language Models (LLMs). It builds upon the foundation laid by PS Prompting by incorporating additional detailed instructions for tasks such as variable extraction and calculation. This approach aims to further improve the quality of reasoning in complex problem-solving scenarios.

Understanding PS+ Prompting

1. Enhanced Focus on Details

- PS+ Prompting emphasizes more granular and detailed instructions compared to the original PS method. This includes explicit directives for identifying and extracting relevant variables and performing meticulous calculations.

2. Objective

- The primary goal is to reduce common errors in reasoning, specifically calculation errors and missing steps, thus enabling LLMs to produce more accurate and coherent responses in zero-shot learning scenarios.
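As a rough illustration of what "more detailed instructions" means in practice, the sketch below assembles a PS+-style trigger sentence from separate instruction fragments. The fragments are my paraphrase of the kind of guidance described above, not the exact published prompt, and the helper names are hypothetical.

```python
# Paraphrased instruction fragments (assumed wording, not the published prompt).
PLANNING_STEPS = [
    "understand the problem",
    "extract the relevant variables and their values",
    "devise a plan",
]
EXECUTION_STEPS = [
    "carry out the plan",
    "calculate intermediate results, paying attention to correct calculation",
    "solve the problem step by step",
    "show the answer",
]


def join_steps(steps: list[str]) -> str:
    """Join fragments as 'a, b, and c'."""
    if len(steps) == 1:
        return steps[0]
    return ", ".join(steps[:-1]) + ", and " + steps[-1]


def ps_plus_trigger() -> str:
    """Assemble a PS+-style trigger sentence from the fragments above."""
    return (
        f"Let's first {join_steps(PLANNING_STEPS)}. "
        f"Then, let's {join_steps(EXECUTION_STEPS)}."
    )


print(ps_plus_trigger())
```

Keeping the fragments in lists makes it easy to drop or reword one instruction at a time and see how sensitive the model is to each.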

Examples and Applications

Example 1: Mathematical Problem-Solving

- Problem: "John has twice as many apples as Tom. If Tom has 5 apples, how many does John have?"

- PS+ Prompting:

- Planning Phase:

- Identify the key relationship (John has twice as many apples).

- Extract the given variable (Tom’s apples = 5).

- Plan to calculate John’s apples based on the relationship.

- Execution Phase:

- Perform the calculation: John’s apples = 2 * Tom’s apples = 2 * 5 = 10.

- PS+ Element: Explicitly instructing the model to identify the relational factor (twice as many) and perform a multiplication operation.
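The same plan can be written out as a few lines of Python, purely to show how variable extraction, relationship extraction, and calculation stay separate. The variable names are mine, not the paper's.

```python
# Step 1: variable extraction (value taken from the problem statement).
variables = {"tom_apples": 5}

# Step 2: relationship extraction ("twice as many" -> multiply by 2).
john_multiplier = 2

# Step 3: execute the plan and keep the intermediate result visible.
john_apples = john_multiplier * variables["tom_apples"]

print(f"John has {john_apples} apples")  # John has 10 apples
```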


Example 2: Commonsense Reasoning

- Problem: "What should you do if you find a lost wallet on the street?"

- PS+ Prompting:

- Planning Phase:

- Identify actions that are generally taken when finding a lost item.

- Extract relevant actions applicable to a wallet (e.g., checking for identification, considering places to hand it in).

- Execution Phase:

- Suggest specific steps: Check for an ID to contact the owner, or hand it in to a nearby police station or lost and found.

- PS+ Element: Detailed instructions to consider ethical and practical aspects of the situation, such as privacy and appropriate places to report the found item.


Advantages and Impact

- Improved Accuracy: By focusing on detailed instructions, PS+ Prompting allows LLMs to perform more accurate calculations and reasoning steps.

- Greater Clarity in Reasoning: The method encourages LLMs to delineate their thought processes more clearly, making their reasoning more transparent and understandable.

- Versatility in Application: Like PS Prompting, PS+ can be applied to various domains but with an added layer of precision and detail.

- Potential in Educational Settings: PS+ Prompting can be particularly beneficial in educational contexts where detailed problem-solving steps are essential for teaching and learning purposes.

By integrating more detailed instructions for variable extraction and calculation, PS+ Prompting not only improves the accuracy of the models in zero-shot scenarios but also enhances the clarity and coherence of their reasoning processes. This method's emphasis on detailed, step-by-step problem-solving holds great promise for a wide range of applications, particularly in fields where precision and detailed reasoning are critical.

PS+ Prompting Results

The results of implementing PS+ Prompting in different reasoning domains reveal significant improvements over existing methods, particularly Zero-shot-CoT, and in some cases, it performs on par with few-shot methods. Let's delve into these results across three distinct reasoning domains: arithmetic, commonsense, and symbolic reasoning.

1. Arithmetic Reasoning

a. Comparison with Zero-shot-CoT

- Outperformance: PS+ Prompting consistently outperforms Zero-shot-CoT in arithmetic reasoning tasks.

- Reason: The structured approach of PS+ Prompting, with its focus on detailed planning and execution, leads to more accurate problem-solving, especially where calculations are involved.

b. Comparison with Few-shot Methods

- Competitive Performance: Interestingly, PS+ Prompting shows comparable results to few-shot methods.

- Significance: This is noteworthy because few-shot methods rely on hand-written worked examples placed in the prompt. Comparable results from PS+ Prompting suggest that detailed instructions alone can recover much of that benefit in a zero-shot context.

2. Commonsense Reasoning

a. Superiority Over Zero-shot-CoT

- Better Performance: In commonsense reasoning tasks, PS+ Prompting demonstrates superior performance compared to Zero-shot-CoT.

- Reasoning Quality: The method's emphasis on detailed planning and execution likely enables the LLM to consider various aspects of a situation more thoroughly, leading to more coherent and contextually appropriate responses.

b. Practical Implications

- Applicability: This improvement is crucial in real-world scenarios where commonsense reasoning is essential, such as in AI-assisted decision-making systems.

3. Symbolic Reasoning

a. Excelling in Certain Datasets

- Outperforming Zero-shot-CoT: PS+ Prompting shows superior results in certain symbolic reasoning datasets compared to Zero-shot-CoT.

- Dataset Specificity: The performance indicates that the effectiveness of PS+ Prompting may be particularly pronounced in certain types of symbolic reasoning tasks.

b. Competitiveness with Manual-CoT

- Competing with Few-shot Prompting: In some cases, PS+ Prompting rivals the performance of Manual-CoT, a few-shot approach built on hand-written demonstrations.

- Implication: This suggests that PS+ Prompting can potentially reduce the need for labor-intensive few-shot learning setups, especially in tasks that require symbolic manipulation or abstract reasoning.

The results of PS+ Prompting across these three domains are highly encouraging. They demonstrate not only the method's effectiveness in improving LLMs' performance in zero-shot learning scenarios but also its potential to rival or even surpass traditional few-shot learning methods in certain contexts. This advancement represents a significant step in the development of more efficient, accurate, and versatile LLMs for various applications, from academic and educational tools to AI-driven decision-making systems.

Error Analysis

Error analysis in the context of PS+ Prompting versus Zero-shot-CoT (Chain-of-Thought) reveals critical insights into how PS+ Prompting improves the accuracy and reliability of Large Language Models (LLMs) in complex reasoning tasks. Let's delve into this comparison in detail:

Understanding the Nature of Errors in Zero-shot-CoT

1. Calculation Errors

- Description: These errors occur when an LLM incorrectly calculates numerical values or performs arithmetic operations.

- Impact: In tasks requiring precise numerical answers, such as arithmetic problems, these errors directly lead to incorrect solutions.

2. Missing-Step Errors

- Description: These errors happen when an LLM skips necessary intermediate steps in a reasoning sequence.

- Impact: The omission of crucial reasoning steps can lead to incomplete or flawed logic, resulting in incorrect conclusions, especially in multi-step problems.

How PS+ Prompting Addresses These Errors

1. Structured Approach to Problem Solving

- Planning Phase: By first creating a plan, the LLM identifies and outlines all necessary steps and components required to solve the problem.

- Execution Phase: Each step of the plan is then executed sequentially, ensuring no critical parts of the reasoning process are overlooked.

2. Detailed Instructions for Variable Extraction and Calculation

- Variable Extraction: PS+ Prompting explicitly instructs the LLM to identify and extract all relevant variables. This ensures that all necessary information is considered before proceeding to the calculation phase.

- Calculation Guidance: Detailed instructions for performing calculations help the LLM to focus on accuracy and precision in numerical operations.

3. Reduction in Errors

- Calculation Errors: With explicit focus on correctly performing calculations, PS+ Prompting significantly reduces the incidence of calculation errors.

- Missing-Step Errors: By structuring the reasoning process into a clear plan followed by execution, PS+ Prompting ensures that all necessary reasoning steps are considered and executed, thereby reducing missing-step errors.

Comparative Error Analysis

1. Error Rates

- Zero-shot-CoT: This approach, while innovative, can exhibit higher rates of calculation and missing-step errors due to its less structured approach.

- PS+ Prompting: Shows a marked reduction in these error types, as indicated by empirical results from various reasoning tasks.

2. Implications for Model Reliability

- Increased Reliability: By reducing these errors, PS+ Prompting enhances the reliability of LLMs for tasks where accuracy in reasoning and calculations is paramount.

- Broadened Applicability: This improvement broadens the potential applications of LLMs, making them more suitable for educational, scientific, and technical domains where precision is crucial.
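If you want to run this kind of comparison on your own prompts, a simple tally is enough to get started. The sketch below assumes you have hand-labeled a sample of wrong answers with the error types discussed above. The labels and the toy data are made up for illustration and are not results from the paper.

```python
from collections import Counter

# Error types discussed above; the label spellings are my own.
ERROR_TYPES = ("calculation", "missing_step", "semantic_misunderstanding")


def error_rates(labels: list[str], n_sampled: int) -> dict[str, float]:
    """Return the share of sampled problems showing each error type."""
    unknown = set(labels) - set(ERROR_TYPES)
    if unknown:
        raise ValueError(f"unknown error labels: {sorted(unknown)}")
    counts = Counter(labels)
    return {name: counts[name] / n_sampled for name in ERROR_TYPES}


# Made-up labels for 10 sampled problems, 4 of which had an error.
toy_labels = ["calculation", "missing_step", "calculation", "semantic_misunderstanding"]
print(error_rates(toy_labels, n_sampled=10))
```

Running the same tally for two prompt styles on the same sample shows which error type a prompt change actually moved.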

The error analysis of PS+ Prompting vis-à-vis Zero-shot-CoT demonstrates how a more structured and detailed approach in problem-solving can significantly enhance the accuracy and reliability of LLMs. By meticulously guiding the models through each step of the reasoning process and emphasizing precise calculations and variable extraction, PS+ Prompting addresses two of the most common pitfalls in LLM reasoning, paving the way for their more effective deployment in a wider range of complex reasoning tasks.

Self-Consistency (SC) in LLMs

Self-consistency (SC) evaluation, when applied to PS+ Prompting, plays a crucial role in enhancing the performance of Large Language Models (LLMs) in complex reasoning tasks. This approach is particularly effective, as demonstrated in the GSM8K and SVAMP datasets. Let's explore how self-consistency augments PS+ Prompting:

Understanding Self-Consistency (SC) in the Context of LLMs

1. Concept of Self-Consistency:

- SC involves generating multiple reasoning outputs or answers for the same problem and then aggregating these outputs to determine the most consistent answer.

- This technique is akin to obtaining several opinions on a complex issue and then finding the common thread or consensus among them.

2. Application to PS+ Prompting:

- In PS+ Prompting, SC can be implemented by prompting the LLM multiple times for the same problem and analyzing the variety of reasoning paths and solutions it provides.

Impact of SC on PS+ Prompting

1. Reduction in Randomness and Error:

- Diverse Reasoning Paths: By generating multiple answers, LLMs explore various reasoning paths, reducing the chance of adhering to a single erroneous line of thought.

- Error Mitigation: The aggregation of multiple outputs allows for the identification and correction of sporadic errors in reasoning or calculation.

2. Improved Accuracy:

- Consensus Finding: The process of finding the most common or consistent answer among multiple outputs tends to yield more accurate results.

- Verification Mechanism: SC acts as a verification mechanism, where the model's outputs are cross-checked against each other.
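The consensus step is usually a plain majority vote over the final answers. Here is a minimal sketch, assuming the final answer has already been extracted from each sampled output as a string. The function name and the toy samples are illustrative.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> tuple[str, float]:
    """Return the most frequent answer and the share of samples that agree.

    Ties are broken by first appearance, which is how Counter.most_common
    orders equal counts.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    winner, count = Counter(answers).most_common(1)[0]
    return winner, count / len(answers)


# Toy final answers from five sampled reasoning chains (made-up values).
samples = ["18", "18", "20", "18", "17"]
print(majority_vote(samples))  # ('18', 0.6)
```

The agreement share is a handy side product: a low value flags problems where the model's reasoning paths disagree and a human check may be worthwhile.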

Empirical Evidence from GSM8K and SVAMP Datasets

1. Performance Enhancement:

- In both the GSM8K and SVAMP datasets, the application of SC to PS+ Prompting has shown noticeable improvements in performance.

- These datasets, which consist of math word problems, benefit from SC because a sporadic slip in one reasoning chain is outvoted by the other chains.

2. Case Studies:

- Example from GSM8K: For a problem involving multiple steps of calculations, SC helps in converging towards the most accurate calculation, as different outputs may vary slightly in numerical reasoning.

- Example from SVAMP: In a problem requiring understanding the context and sequential reasoning, SC can identify the most logical sequence of steps, thereby improving the model's accuracy.

The integration of Self-Consistency with PS+ Prompting represents a significant advancement in the field of AI reasoning. By leveraging multiple outputs and focusing on the most consistent ones, LLMs are able to achieve greater accuracy and reliability. This approach is particularly beneficial in tackling complex reasoning problems, as evidenced by the improved performance in the GSM8K and SVAMP datasets. It illustrates the potential of combining advanced prompting techniques with robust evaluation methods to enhance the capabilities of LLMs.

A PS+ Prompting Framework for Enhanced Reasoning

Based on the insights and methodologies discussed in the paper about Plan-and-Solve (PS) and its enhanced version, PS+ Prompting, we can propose a comprehensive framework for improving the reasoning capabilities of Large Language Models (LLMs) in various problem-solving scenarios. This framework would incorporate the principles of detailed planning, execution, self-consistency evaluation, and error analysis to optimize LLM performance.

Framework for Enhanced Reasoning in LLMs

1. Initial Problem Analysis

- Problem Identification: Classify the problem into categories such as arithmetic reasoning, commonsense reasoning, or symbolic reasoning.

- Key Component Extraction: Automatically extract key components or variables from the problem statement.

2. PS+ Prompting Mechanism

- Planning Phase:

- Develop detailed instructions for the LLM to devise a plan, breaking the problem into smaller subtasks.

- Ensure the inclusion of all necessary variables and relationships identified in the initial analysis.

- Execution Phase:

- Guide the LLM to sequentially execute each subtask.

- Focus on detailed calculations, logical reasoning, and coherence in the execution.

3. Self-Consistency (SC) Implementation

- Multiple Reasoning Paths: Generate multiple outputs for the same problem using the PS+ prompting structure.

- Consensus Analysis: Employ algorithms to analyze the outputs and identify the most consistent and logical solution.

4. Error Analysis and Correction

- Identify Errors: Analyze the LLM outputs for common errors, such as calculation inaccuracies and missing logical steps.

- Feedback Loop: Use identified errors to refine the PS+ prompting instructions and improve the model's reasoning capabilities.

5. Performance Evaluation

- Dataset-Specific Evaluation: Test the framework using diverse datasets like GSM8K and SVAMP to evaluate its effectiveness across different problem types.

- Metrics Measurement: Measure performance improvements using accuracy, error reduction, and consistency as key metrics.

6. Iterative Refinement

- Continual Learning: Incorporate feedback from performance evaluations to continually refine the prompting mechanism and SC implementation.

- Model Adaptation: Adapt the framework to different LLMs and problem types, ensuring versatility and scalability.
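For the performance evaluation step, accuracy against reference answers is the simplest metric to start with. The sketch below compares two prompting styles on a toy set of four problems. All answers are made up, and the names `baseline` and `candidate` are placeholders for whichever prompts you are comparing.

```python
def accuracy(predictions: list[str], gold: list[str]) -> float:
    """Fraction of problems whose final answer matches the reference."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("need at least one problem")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


# Toy final answers for four problems under two prompting styles (made up).
gold = ["5", "10", "12", "30"]
baseline = ["5", "9", "12", "25"]
candidate = ["5", "10", "12", "25"]

print(accuracy(baseline, gold))   # 0.5
print(accuracy(candidate, gold))  # 0.75
```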

Implementation Considerations

- User Interface: Develop a user-friendly interface for problem input and solution visualization.

- Customization: Allow customization of the framework for specific domains or user requirements.

- Scalability: Ensure the framework can scale to accommodate larger or more complex datasets and more advanced LLMs.

This proposed framework aims to significantly enhance the problem-solving abilities of LLMs by combining the strengths of PS+ Prompting with self-consistency techniques and robust error analysis. By continuously refining the framework based on performance feedback, it can be adapted to a wide range of reasoning tasks, making it a versatile tool in the advancement of AI reasoning capabilities.

PS+ Prompting with Self-Consistency

To effectively utilize the Plan-and-Solve Plus (PS+) Prompting framework in conjunction with Self-Consistency (SC) for Large Language Models (LLMs), a structured workflow is essential. This workflow can ensure that the LLM efficiently processes and solves complex problems. Let's outline a practical workflow using a specific example:

Workflow for PS+ Prompting with Self-Consistency

Step 1: Problem Identification

- Input: Receive and classify the problem.

- For example: A math word problem, "A farmer has 15 apples and gives away 5. How many does he have left?"

Step 2: Initial Problem Analysis

- Key Component Extraction: Identify key information and variables.

- Example Analysis: Total apples = 15, apples given away = 5.

Step 3: PS+ Prompting Setup

- Planning Phase: Formulate a detailed plan to solve the problem.

- Execution Phase: Prepare instructions for executing each step of the plan.

- Example Plan:

- Step 1: Identify total number of apples.

- Step 2: Determine number of apples given away.

- Step 3: Calculate remaining apples.

Step 4: Self-Consistency (SC) Implementation

- Multiple Reasoning Paths: Generate multiple solutions using the PS+ Prompt.

- Example: Run the problem through the LLM multiple times to get different reasoning chains.

Step 5: Consensus Analysis

- Aggregate Outputs: Analyze multiple solutions for consistency.

- Example: Assess different answers and reasoning paths to identify the most common solution.

Step 6: Error Analysis and Correction

- Identify and Analyze Errors: Look for calculation mistakes or logical inconsistencies in the LLM outputs.

- Example: Check if any calculation errors or missed steps occurred in different outputs.

Step 7: Solution Finalization

- Determine Final Answer: Based on the most consistent and error-free solution.

- Example Result: The farmer has 10 apples left.

Step 8: Performance Evaluation and Feedback

- Evaluate Effectiveness: Assess the accuracy and efficiency of the solution.

- Iterative Improvement: Use insights from the evaluation to refine future PS+ prompts and SC implementations.

Application: Solving the Example Problem

- Problem Input: "A farmer has 15 apples and gives away 5. How many does he have left?"

- Extract Information: Total = 15 apples, Given Away = 5 apples.

- Plan and Execute with PS+ Prompting:

- Plan: Subtract the number of apples given away from the total.

- Execute: Calculate 15 - 5.

- Generate Multiple Outputs with SC:

- Run the problem multiple times.

- Collect different reasoning chains.

- Analyze for Consensus:

- Most outputs indicate 10 apples as the answer.

- Error Analysis:

- Confirm no calculation errors or logical fallacies.

- Finalize Solution:

- Confirm the farmer has 10 apples left.

- Evaluate and Refine:

- Assess the process for any inefficiencies or areas for improvement.
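The workflow above can be sketched end to end in a few lines. This is a minimal sketch under stated assumptions: `sample` stands in for whatever sampled LLM call you use, the trigger sentence is my paraphrase rather than the published prompt, and treating the last number in a completion as the final answer is a simplification. The canned completions let the sketch run without a model.

```python
import re
from collections import Counter
from typing import Callable, Optional

# Stand-in for a sampled LLM call: maps a prompt to one completion.
Sampler = Callable[[str], str]

# Paraphrased PS+-style instruction (assumed wording, not the published prompt).
TRIGGER = (
    "Let's first understand the problem, extract the relevant variables, and "
    "devise a plan. Then, let's carry out the plan, calculate intermediate "
    "results carefully, and show the answer."
)


def last_number(text: str) -> Optional[str]:
    """Treat the last number in a completion as its final answer."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", text)
    return numbers[-1] if numbers else None


def solve(question: str, sample: Sampler, n: int = 5) -> Optional[str]:
    """Sample n reasoning chains and return the most common final answer."""
    prompt = f"Q: {question}\nA: {TRIGGER}"
    answers = [last_number(sample(prompt)) for _ in range(n)]
    answers = [a for a in answers if a is not None]
    return Counter(answers).most_common(1)[0][0] if answers else None


# Canned completions so the sketch runs without a model; one is deliberately wrong.
canned = iter([
    "Total = 15, given away = 5. Plan: subtract. 15 - 5 = 10. Answer: 10",
    "Variables: 15 and 5. 15 - 5 = 10. The farmer has 10",
    "15 + 5 = 20. Answer: 20",
])
question = "A farmer has 15 apples and gives away 5. How many does he have left?"
print(solve(question, lambda _prompt: next(canned), n=3))  # 10
```

The wrong chain is outvoted two to one, which is the whole point of the consensus step.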

This workflow demonstrates how to efficiently apply the PS+ Prompting framework with Self-Consistency to enhance LLMs' problem-solving abilities. By following this structured approach, it is possible to systematically address and solve complex problems, ensuring accuracy and reliability in the model's outputs.

PS+ Prompting framework with Self-Consistency (SC)

The PS+ Prompting framework with Self-Consistency (SC), as outlined in the workflow, can be effectively utilized in the realm of prompt engineering for Large Language Models (LLMs). Prompt engineering involves crafting input prompts that guide LLMs to produce more accurate, relevant, and contextually appropriate responses. Here's how the PS+ and SC framework can enhance prompt engineering:

Integration in Prompt Engineering

1. Structured Problem-Solving Approach

- Detailed Instructions: The framework encourages the development of prompts that include detailed, step-by-step instructions, leading LLMs through a structured reasoning process.

- Application: In prompt engineering, this approach can be used to design prompts that systematically guide the LLM through complex tasks, ensuring each necessary step is considered.

2. Enhanced Reasoning Quality

- Variable Extraction and Calculation: By emphasizing the importance of identifying relevant variables and performing accurate calculations, the framework can be applied to craft prompts that enhance the LLM’s focus on these key aspects.

- Application: This can be particularly useful in fields such as data analysis, financial forecasting, or technical problem-solving, where precision in handling data and numbers is crucial.

3. Self-Consistency for Error Reduction

- Multiple Outputs for Consensus: SC samples several outputs for the same prompt and keeps the answer they most often agree on. In prompt engineering, this means writing prompts whose final answer is easy to extract and compare across samples.

- Application: This is most useful when different reasoning paths should converge on one checkable answer, such as a numeric result. For open-ended tasks like creative problem-solving or scenario analysis, the samples serve better as a set of alternatives to compare than as votes.

4. Adaptability to Different Domains

- Flexible Prompt Design: The framework’s principles are adaptable to a wide range of domains, allowing for the creation of specialized prompts based on the specific requirements of different fields.

- Application: Prompts can be engineered to suit various applications, from educational purposes, where detailed explanations are necessary, to industry-specific tasks that require domain-specific knowledge and reasoning.

Practical Example: Educational Problem-Solving

- Problem: "A class has 20 students. If 40% are absent one day, how many students are present?"

- PS+ Prompt Engineering:

- Create a prompt that guides the model to first calculate the number of absent students (20 * 40%) and then subtract this from the total (20 - number of absent students).

- SC Implementation:

- Design the prompt to encourage the model to approach the problem in multiple ways, for example, direct subtraction or complementary percentage calculation (100% - 40% = 60% present).

- Use in Education:

- Such prompts can be used to create educational tools that not only provide answers but also demonstrate different methods of solving a problem, enhancing learning and understanding.
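Both routes are easy to verify by hand, and writing them out shows why they must agree. The sketch uses `Fraction` so that 40% stays exact; the variable names are mine.

```python
from fractions import Fraction

total_students = 20
absent_share = Fraction(40, 100)  # 40% kept exact to avoid float rounding

# Route 1: count the absentees, then subtract them from the total.
absent = total_students * absent_share
present_by_subtraction = total_students - absent

# Route 2: take the complementary share (100% - 40% = 60%) directly.
present_by_complement = total_students * (1 - absent_share)

print(absent, present_by_subtraction, present_by_complement)  # 8 12 12
```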

The integration of the PS+ and SC framework in prompt engineering represents a significant advancement in the utility and effectiveness of LLMs. By crafting prompts that incorporate detailed planning, execution steps, and self-consistency checks, it's possible to significantly enhance the LLMs' problem-solving capabilities. This approach is versatile, adaptable to various domains, and can be particularly transformative in educational and technical applications where detailed and accurate reasoning is paramount.

PS+ Prompting framework with Self-Consistency (SC) Case Study

Let's conduct a detailed case study using the PS+ Prompting framework with Self-Consistency (SC) applied in the context of an educational tool for teaching mathematical problem-solving. This case study will illustrate how the framework can be utilized to create effective prompts for a Large Language Model (LLM) like GPT-3, focusing on improving its ability to solve and explain math problems.

Case Study: Educational Math Problem-Solving Tool

Background

- Objective: To develop a tool that helps students learn mathematical concepts and problem-solving strategies.

- Target Users: Middle school students struggling with arithmetic word problems.

- Problem Type: Arithmetic word problems involving multiple steps and concepts.

Problem Example

- Problem Statement: "A farmer has 50 apples. She sells some at the market and has 20 apples left. How many apples did she sell?"

Step-by-Step Application of PS+ and SC

Step 1: Initial Prompt Development

- PS+ Prompting:

- Prompt: "Let's solve this step by step. First, determine the total number of apples the farmer initially had and the number left after selling."

Step 2: Enhancing the Prompt for Detail

- Refined PS+ Prompt:

- Prompt: "The farmer started with a certain number of apples and sold some. To find out how many she sold, subtract the number of apples she has left from the total number she started with. What is the initial number of apples and what is the remaining number?"

Step 3: Implementing Self-Consistency

- SC Enhanced Prompt:

- Prompt: "Think of two ways to solve this. One method is direct subtraction. Another might involve calculating the percentage sold. Compare these methods and decide which gives a clear answer."

Step 4: Error Checking and Iterative Refinement

- Prompt for Error Analysis:

- Prompt: "After solving the problem, review your steps. Are there any calculation errors or steps you might have missed? Explain why your answer is correct."

Analysis and Outcome

1. Model Response Analysis

- The LLM provides two solutions: one using direct subtraction (50 - 20) and another working from the share of apples left (20 of 50, or 40%) to the share sold (60% of 50).

- It explains each step, ensuring the reasoning is clear and educational.

2. Evaluating Educational Efficacy

- The solutions demonstrate different methods, enhancing educational value.

- The error-checking step encourages critical thinking and self-assessment.

3. Refinement Based on Student Feedback

- If students find one method clearer than the other, future prompts can be adjusted to emphasize that method.

- Feedback on the explanation's clarity can be used to further refine the prompt's structure.
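For reference, here is how the two solution paths from the model response analysis work out. The percentage route only makes sense if it starts from the share of apples left, so that is what the sketch assumes. The variable names are illustrative.

```python
from fractions import Fraction

initial, remaining = 50, 20

# Method 1: direct subtraction.
sold_direct = initial - remaining

# Method 2: via shares. The share left is remaining/initial, so the share
# sold is its complement, which is then applied to the initial count.
share_left = Fraction(remaining, initial)   # 2/5, i.e. 40%
share_sold = 1 - share_left                 # 3/5, i.e. 60%
sold_via_share = share_sold * initial

print(sold_direct, share_sold, sold_via_share)  # 30 3/5 30
```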

This case study demonstrates how the PS+ Prompting framework, augmented with SC, can be effectively used to create educational tools that aid in learning and understanding complex concepts. By guiding the LLM through a structured problem-solving process and encouraging the exploration of multiple methods, the tool not only provides answers but also fosters a deeper understanding of the underlying mathematical principles. This approach, with its emphasis on clarity, step-by-step reasoning, and error analysis, is particularly valuable in educational settings where learning the process is as important as finding the correct answer.

FAQs

1. What are the main advantages of PS+ prompting over traditional Zero-shot-CoT?

- Structured Approach: PS+ prompting divides complex problems into smaller, manageable subtasks, enabling a more organized and clear thought process.

- Error Reduction: It significantly reduces calculation and missing-step errors by providing detailed instructions for each step of the problem-solving process.

- Enhanced Reasoning Quality: With specific guidance on variable extraction and intermediate calculations, PS+ prompting leads to more accurate and logically sound reasoning.

Example: In solving a complex arithmetic problem, PS+ would guide the LLM to first identify key numbers and relationships, then sequentially address each part of the problem, reducing the likelihood of overlooking critical steps.

2. How does PS+ prompting improve calculation accuracy in LLMs?

- Focused Calculation Instructions: PS+ explicitly instructs LLMs to pay attention to calculations, leading to a more careful and accurate computation process.

- Intermediate Result Checking: By prompting LLMs to calculate and verify intermediate results, PS+ reduces the accumulation of errors in multi-step problems.

Example: When calculating the area of a complex geometric figure, PS+ would prompt the LLM to break down the figure into simpler shapes, calculate each area separately, and then sum them up, checking each step for accuracy.
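A tiny worked version of that idea, assuming a hypothetical L-shaped figure that splits into two non-overlapping rectangles. The dimensions are made up.

```python
# Hypothetical L-shaped figure split into two non-overlapping rectangles,
# given as (width, height) in the same length unit.
parts = {
    "vertical_bar": (2, 6),
    "horizontal_bar": (4, 2),
}

# Keep each intermediate area visible so it can be checked on its own.
areas = {name: w * h for name, (w, h) in parts.items()}
total_area = sum(areas.values())

print(areas)       # {'vertical_bar': 12, 'horizontal_bar': 8}
print(total_area)  # 20
```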

3. Can PS+ prompting be applied to non-mathematical reasoning tasks?

- Versatility: Yes, PS+ prompting is adaptable to various types of reasoning tasks, including commonsense and symbolic reasoning.

- Customizable Prompts: The strategy can be tailored to suit the specific requirements of different reasoning domains.

Example: In a commonsense reasoning task like planning an event, PS+ could guide the LLM to consider factors like venue capacity, event purpose, and attendee preferences in a step-by-step manner.

4. What are some potential real-world applications of PS+ prompting?

- Education: Assisting in complex problem-solving and tutoring in various subjects.

- Business Analytics: Enhancing decision-making processes by breaking down complex market data.

- Medical Diagnostics: Aiding in diagnosing diseases by systematically analyzing symptoms and medical histories.

Example: In a business setting, PS+ could help analyze market trends by sequentially examining different economic indicators and their interrelations.

5. How might future developments in LLMs impact the effectiveness of PS+ prompting?

- Advanced Understanding: Future LLMs with more nuanced comprehension could make PS+ prompting more effective in understanding and solving complex problems.

- Adaptability: Enhanced LLMs could improve the adaptability of PS+ prompting across various languages and cultural contexts.

- Self-Improving Algorithms: Future developments might include LLMs that can learn from past interactions, continually refining the effectiveness of PS+ prompting.

Example: An advanced LLM might apply PS+ prompting to a legal case study, understanding not only the legal statutes but also the nuances of the case, leading to more sophisticated reasoning and argumentation.