While generative AI tools like ChatGPT offer efficiency gains, their use by legal professionals raises serious concerns about accuracy, confidentiality, and ethics that demand caution and regulatory guidance.

This Article Covers:

Key Challenges and Concerns

- Ensuring Accuracy and Accountability

- Confidentiality and Client Trust

- Staying Current with AI's Rapid Pace of Change

- The Opacity of AI's "Black Box"

- The Specter of Algorithmic Bias

- Cybersecurity Vulnerabilities

- Navigating Ethical Quandaries

Guidance Emerging for Responsible AI Use

- Bar Associations Developing Policies and Frameworks

- Following Developing Best Practices

Strategies for Effective Adoption

- Mastering Prompt Engineering

- Rigorous Human Review and Validation

- Transparent Communication with Clients

- Evaluating and Mitigating Risks

Introduction

Generative AI tools like ChatGPT have captured attention for their ability to generate human-like text on command. For legal professionals, these tools promise potential gains in efficiency by automating certain writing tasks. However, their capabilities also pose risks related to the accuracy, security, and ethical use of AI. As this technology continues advancing rapidly, regulatory guidance is needed to harness its benefits while protecting clients and the legal system.

What is Generative AI (GenAI)

Generative AI refers to artificial intelligence systems that utilize neural networks trained on massive datasets to generate new content such as text, images, audio, and video.

Drawing insights from patterns in the training data, generative AI models can produce original outputs that possess some of the style, structure and characteristics of their inputs. This ability to synthesize data makes generative AI useful for a wide range of applications from content creation to data augmentation.

However, due to its data-driven nature, generative AI also risks inheriting biases and limitations present in the training data. Thoughtful oversight is required to ensure generative AI is applied responsibly and ethically.

LLMs and Their Limitations

The generative AI systems discussed in the rest of this article are Large Language Models (LLMs), which include ChatGPT and Claude, to name a few.

An LLM, or large language model, is a type of generative AI system trained on massive text datasets to generate coherent writing or engage in conversation. By learning linguistic patterns, LLMs can produce human-like text, but they also carry risks of bias from the training data.

Open-Source vs Closed Source LLMs

When evaluating large language models for usage, a key distinction is whether the LLM is open source or closed source. Open source LLMs like Meta's LLaMA are publicly available, allowing greater visibility into how they are built and how they behave. This transparency aids responsible adoption.

In contrast, closed source proprietary LLMs like OpenAI's GPT-3 offer limited insight into their inner workings, datasets, and content filters.

Open Source LLMs

- Publicly available and transparent about training data and methods

- Promotes responsible adoption through full visibility into capabilities and limitations

- Can be self-hosted and does not need to be connected to the internet

- Examples include Meta's LLaMA

Closed Source Proprietary LLMs

- Limited visibility into training data, content filters, and workings of the model

- Lack of transparency introduces risks around biases, security, ethics

- May offer advantages like large scale computing resources

- Offers best-in-class performance and abilities

- Examples include OpenAI's GPT-3

When adopting LLMs, carefully weigh the benefits and risks of open versus closed source. Blending both may enable an optimal approach, but transparency and human oversight are critical regardless of source.

The Role of LLMs

- LLMs, or large language models, are at the heart of many modern Generative AI systems, particularly in the realm of natural language processing.

- Their primary function is to predict the next word or sequence of words in a given context, allowing for applications such as text generation, summarization, and question answering.
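To make next-word prediction concrete, here is a minimal sketch of the idea using simple word-pair counts. It is a toy illustration only: real LLMs use neural networks trained on vastly more text, and the function names and sample sentences below are assumptions made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text, for illustration only.
corpus = (
    "the court held that the contract was void "
    "the court held that the clause was enforceable "
    "the court found that the contract was valid"
)
model = train_bigram_model(corpus)
print(predict_next(model, "court"))     # held
print(predict_next(model, "contract"))  # was
print(predict_next(model, "statute"))   # None
```

The toy model can only repeat patterns it has seen, which hints at why the quality and balance of training data matter so much for real systems.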

There are two broad categories of LLMs to consider: open source and closed source.

Limitations of LLMs

LLMs, despite their prowess, are not without their limitations. These limitations include:

- A restricted context window, often constrained by token limits.

- Potential for hallucinations, that is, generating information not grounded in reality.

- Biases in the training data that can be reflected in their outputs.
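The context window limitation has a practical consequence: a long document may need to be split before it can be submitted. The sketch below groups paragraphs into chunks that fit an assumed token budget. The four-characters-per-token estimate is a rough rule of thumb and an assumption here, not an exact count; each provider's own tokenizer gives the real figure, and the function names are hypothetical.

```python
def estimate_tokens(text):
    """Very rough estimate: assumes about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def split_for_context_window(paragraphs, max_tokens):
    """Group paragraphs into chunks whose estimated size stays within max_tokens.

    A single paragraph larger than max_tokens is still placed in its own
    chunk, so oversized paragraphs need separate handling.
    """
    chunks, current, current_tokens = [], [], 0
    for paragraph in paragraphs:
        tokens = estimate_tokens(paragraph)
        # Start a new chunk when adding this paragraph would exceed the budget.
        if current and current_tokens + tokens > max_tokens:
            chunks.append(current)
            current, current_tokens = [], 0
        current.append(paragraph)
        current_tokens += tokens
    if current:
        chunks.append(current)
    return chunks


# Three placeholder paragraphs of about 100 estimated tokens each.
paragraphs = ["a" * 400, "b" * 400, "c" * 400]
chunks = split_for_context_window(paragraphs, max_tokens=250)
print([len(chunk) for chunk in chunks])
```

Splitting on paragraph boundaries keeps related sentences together, but the model still sees each chunk in isolation, so cross-references between chunks can be missed.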

The Efficiency Promise of Generative AI

For lawyers and legal professionals, ChatGPT and similar natural language AI tools offer a compelling value proposition: the ability to quickly generate legal documents, research memos, and other writing simply by describing what you need. Instead of hours painstakingly drafting contracts or memos, the promise is that AI can deliver a competent first draft with just a few prompts. For bustling law firms and legal departments, such efficiency gains appear highly attractive.

Harnessing AI's Potential to Augment Legal Research and Analysis

When applied judiciously, generative AI has immense potential to assist lawyers with certain research and analysis tasks:

Automated Document Review - By rapidly analyzing large volumes of legal documents, generative AI can save attorneys time by surfacing relevant information, clauses, and evidence. This supports tasks ranging from discovery to due diligence. However, human oversight is critical to validate relevance.

Understanding New Legal Concepts - AI tools can help lawyers quickly grasp new or unfamiliar legal ideas by identifying patterns in related data sources. This provides useful context and background when encountering novel issues.

Due Diligence Support - Generative AI can aid in due diligence by flagging potential issues and risks in documents based on patterns and outliers. This allows lawyers to more efficiently prioritize what requires further examination.

Current Insights - AI can rapidly synthesize large amounts of current case law, rulings, and news to inform lawyers' understanding of fast-evolving legal topics. This equips attorneys to give clients well-grounded advice.

Enhanced Case Strategy - By assessing previous similar cases and legal strategies, generative AI can provide ideas to help lawyers craft more compelling and innovative arguments. But human creativity remains essential.

Bolstering Confidence - As a research force-multiplier, AI can give lawyers greater confidence in their mastery of facts and legal nuances heading into cases. However, maintaining ethical standards remains paramount.

When applied carefully as a supplement rather than a crutch, generative AI can empower lawyers to provide clients first-rate representation built on enhanced research capabilities. But ethical, accountable usage requires uncompromising human oversight. AI should enhance legal expertise, not replace it.

The Challenges of Generative AI in Legal Practice

Ensuring Accuracy and Accountability in AI-Generated Legal Work

Among the chief concerns surrounding generative AI is the accuracy of its outputs. When creating legal documents or analysis, even minor factual errors or misstatements of law can have significant consequences. Yet current systems like ChatGPT frequently generate demonstrably incorrect information.

Relying on these tools to produce first drafts of client work without extensive human review and validation invites unacceptable risks. Substandard legal advice based on AI errors exposes lawyers to professional liability and disciplinary action. Small mistakes in automatically generated contracts could also lead to unfavorable outcomes.

Equally important, accuracy problems undermine client interests and public trust in the profession. Generative AI's lack of contextual understanding means its output cannot be assumed to reflect ground truth without diligent verification.

Addressing this will require lawyers to implement rigorous quality control and validation processes. Generative AI should only be utilized in ways compatible with ethics rules on competence. Maintaining professional accountability means bringing critical thinking, rather than blind faith, to the use of these tools.
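One small piece of such a validation process can be sketched in code: pulling every case citation out of an AI-generated draft so that a human can check each one against a trusted source. The pattern below is an assumption that covers only a few U.S. reporter formats, the function names are hypothetical, and the second citation in the sample draft is a made-up placeholder. The sketch builds a checklist; it does not verify anything itself.

```python
import re

# Assumed pattern: matches a handful of U.S. reporter formats only,
# e.g. "347 U.S. 483", "123 F.3d 456", "12 F. Supp. 2d 345".
CITATION_PATTERN = re.compile(
    r"\b\d{1,4}\s+(?:U\.S\.|F\.\s?(?:2d|3d|4th)|F\.\s?Supp\.(?:\s?[23]d)?)\s+\d{1,4}\b"
)


def build_review_checklist(draft):
    """List each distinct citation found in the draft, marked as unverified."""
    seen = []
    for match in CITATION_PATTERN.finditer(draft):
        citation = match.group(0)
        if citation not in seen:
            seen.append(citation)
    return [{"citation": citation, "verified": False} for citation in seen]


def all_citations_verified(checklist):
    """True only when a human reviewer has marked every listed citation verified."""
    return all(item["verified"] for item in checklist)


# Sample draft; "Smith v. Jones, 123 F.3d 456" is a hypothetical placeholder.
draft = (
    "Under Brown v. Board of Education, 347 U.S. 483 (1954), and "
    "Smith v. Jones, 123 F.3d 456 (9th Cir. 1997), the claim fails. "
    "See also 347 U.S. 483."
)
checklist = build_review_checklist(draft)
for item in checklist:
    print(item)
print(all_citations_verified(checklist))
```

A citation the pattern fails to recognize never reaches the checklist, and an empty checklist trivially passes, so a tool like this supplements a full read-through by a lawyer rather than replacing it.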

With care, oversight, and continuous improvement, generative AI's accuracy issues are not insurmountable. But unlocking its responsible use for lawyers starts with recognizing today's limitations. Clients come first, and for now, that means treating AI-generated work as only the starting point.

Upholding Confidentiality and Trust

Another of the deepest concerns posed by generative AI is maintaining client confidentiality. Lawyers have an uncompromising ethical duty to keep client information private and secure. But the data practices of AI providers raise alarms.

Many generative AI tools rely on vast datasets scraped from the public web or provided by users. The potential exists for client details to be exposed, intentionally or not, via these feeds. Confidences could also leak through AI conversational responses.

Equally troubling is the opacity around provider data policies. Lawyers have limited insight into how client data is secured, who can access it, and the purposes it is used for. At minimum, this fosters perception problems for clients concerned about privacy.

And while AI developers pledge commitment to confidentiality, rapid commercial evolution of these technologies breeds distrust. Generative AI's hunger for data has the potential to make lawyers and clients its product, not just its users.

Addressing these concerns will necessitate strict usage controls, auditing what data is revealed to AI, and intense scrutiny of provider policies. Lawyer oversight of AI must ensure clients' privacy interests come first.

More broadly, generative AI's data practices threaten to undermine the legal profession's duty of confidentiality, which undergirds public trust. Strict ethical standards and protections must be maintained in the AI era. Clients entrust lawyers with their hopes, fears and secrets. This covenant cannot be forfeited at the altar of innovation.

Staying Abreast of AI's Breakneck Pace of Change

One of the defining traits of generative AI systems like ChatGPT is their blistering pace of evolution. These technologies are not stagnant - they are constantly being updated with new techniques, data and capabilities. While this propels rapid improvements, it also poses challenges for legal professionals to stay current.

In the span of mere months, ChatGPT has already demonstrated stunning advances in the coherence of its writing and conversational abilities. Yet despite these gains, its skills remain imperfect. Blindly assuming further progress will address all deficiencies would be misguided.

However, not closely tracking AI's advancement risks missing important improvements or being caught unprepared as new capabilities emerge. Without keeping up, professionals may use AI under outdated assumptions or in ways no longer ethical or advisable.

Regularly reviewing changes to major generative AI tools is now an imperative. Following expert commentary and guidance from ethics bodies will be key to staying informed amidst AI's whirlwind evolution. Testing updated versions and refreshing one's knowledge should become routine.

The pace of change also underscores the value of adaptable frameworks over hard-and-fast rules for AI usage. Rigid policies quickly become outdated. Core principles of ethics and accountability should guide appropriate usage as capabilities morph.

Yes, AI's relentless progress promises ever-increasing utility. But legal professionals have a duty to doggedly keep up with the state-of-the-art to ensure they employ it properly. Riding this wave of constant change requires both eternal vigilance and a flexible mindset.

The Black Box Problem: AI's Lack of Transparency

A significant drawback of generative AI systems like ChatGPT is their lack of transparency. When these AI tools generate content or provide analysis, it can be nearly impossible to understand their reasoning process. This opacity poses risks for legal usage.

Generative AI systems rely on neural networks and statistical models to produce their outputs. But the underlying logic behind their text and conclusions is not revealed or easily interpreted. Unlike a human lawyer carefully explaining their analysis in a memo, the AI simply delivers an end product.

This black box nature makes it difficult to vet the accuracy and soundness of generative AI's work. Without transparency into its reasoning, lawyers cannot properly evaluate if AI-generated documents or legal viewpoints are logically rigorous and complete.

The lack of explainability also creates dilemmas related to ethics rules on competence and communication with clients. If lawyers do not comprehend an AI system's approach, they may fall short of their duty to independently assess its suitability. And opaque AI conclusions hamper clear communication with clients about the basis for legal advice.

Overall, generative AI's lack of transparency means lawyers relying heavily on these tools do so largely on faith. Until AI systems can be imbued with more interpretability, their utility for legal professionals will remain limited. Responsible usage requires recognizing this opacity and mitigating associated risks.

The Looming Threat of Algorithmic Bias

In addition to transparency issues, generative AI poses another subtler but equally serious risk: algorithmic bias. Because these systems are trained on vast datasets, any biases or skewed perspectives in that training data get propagated into the AI's outputs. Left unchecked, this can lead to discriminatory and unethical results.

For instance, an AI trained primarily on legal documents authored by male lawyers may inadvertently generate advice that overlooks female perspectives. Or overreliance on data from specific industries could result in legal documents unsuitable for other business contexts.

These biases become especially problematic when generative AI is used to create content related to people from marginalized groups. Without diligent mitigation of bias, AI could generate offensive or prejudiced language about protected classes.

For lawyers, ethical obligations around diversity, equality, and fairness mean generative AI usage without proper bias evaluation is highly risky. AI-generated documents or viewpoints underpinned by blind spots or prejudice clearly violate professional duties.

Addressing risks of unfair outputs requires ongoing bias testing, auditing algorithms for issues, and ensuring diverse data is used for training. As long as biases persist in data, generative AI carries the potential for inadvertently inequitable results.

Curbing Cybersecurity Dangers in Legal AI

Lawyers have an ethical duty to safeguard confidential client information. But generative AI introduces new data security vulnerabilities that demand vigilance. These systems' reliance on vast data stores and cloud-based neural networks creates potential for cyberattacks and data breaches.

For instance, a confidential memo created by AI could be exposed in a breach of the vendor's systems. Client details used to train custom AI tools may also be jeopardized in a hack. AI is also susceptible to data poisoning - malicious actors can corrupt training data to skew outputs and conclusions.

Additionally, generative AI can produce security risks through inadvertent data leakage. An AI assistant conversing with users could accidentally disclose sensitive details through its responses. The data collection policies of some AI vendors also raise questions about access to usage information.

For legal professionals, such vulnerabilities highlight the need for strict security safeguards when using generative AI. Vetting providers, maximizing encryption, controlling data access, and implementing cybersecurity best practices are critical. AI should be deployed in a walled garden separated from other systems.

Equally important is restricting the information provided to generative AI to only what is required for a specific purpose. With cyber threats constantly evolving, maintaining rigorous data security is essential for responsibly leveraging these tools. Generative AI warrants extra precautions to avoid becoming a new attack vector against law firms and legal departments.
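As a rough illustration of restricting what is sent to an AI tool, the sketch below replaces a few kinds of identifying details with placeholders before text leaves the firm. The patterns are assumptions covering only simple U.S.-style formats, the function name is hypothetical, and the sample details are fictional. Pattern matching like this will miss many identifiers, so it is a first filter, not a guarantee of confidentiality.

```python
import re

# Assumed patterns: simple formats only; real data needs far broader coverage.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b")),
]


def redact(text, client_names=()):
    """Replace known client names and pattern matches with placeholders.

    Returns the redacted text and a count of replacements per category,
    which can be logged as a record of what was withheld.
    """
    counts = {}
    for name in client_names:
        pattern = re.compile(re.escape(name), re.IGNORECASE)
        text, replaced = pattern.subn("[CLIENT]", text)
        counts["CLIENT"] = counts.get("CLIENT", 0) + replaced
    for label, pattern in REDACTION_RULES:
        text, replaced = pattern.subn(f"[{label}]", text)
        counts[label] = replaced
    return text, counts


# Fictional sample details, for illustration only.
sample = (
    "Jane Roe (jane.roe@example.com, 555-867-5309, SSN 123-45-6789) "
    "disputes the lease."
)
redacted, counts = redact(sample, client_names=["Jane Roe"])
print(redacted)
print(counts)
```

Keeping the replacement counts gives the firm a simple audit trail of what was stripped from each request, which supports the data auditing discussed earlier.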

The Ethical Quandary

Most importantly, legal ethics rules prohibit lawyers from outsourcing work in a way that compromises competent representation, client confidentiality, or other professional duties. Generative AI's role, if any, in delivering ethical legal services remains uncertain and hotly debated. Tasks like communicating advice to clients may cross ethical lines if AI is involved without transparency. How legal professionals can use technologies like ChatGPT ethically merits serious examination.

Balancing SaaS Convenience with On-Premise Control

Many emerging generative AI vendors rely on cloud-based or SaaS delivery models. But for law firms handling sensitive cases, trusting data to external parties may be untenable. This is spurring interest in on-premise AI offerings.

With SaaS AI, legal professionals relinquish control over data to the vendor and underlying infrastructure providers. For those handling client matters involving public figures or controversial issues, this lack of control is troubling. Data could be exposed via security lapses or employee misuse.

Firms working on confidential cases may therefore require on-premise AI tools where data stays under their control. While more complex to deploy, on-premise solutions avoid third-party data risks altogether. AI is managed internally within the law firm's own systems and servers.

However, on-premise AI also has downsides like higher costs and limited scalability. Further, internal AI systems require extensive diligence to secure against threats like data exfiltration by malicious insiders.

Hybrid options are emerging to balance these tradeoffs. For instance, some vendors offer managed private cloud AI where only the law firm has data access. Others allow training custom models using confidential data without retaining or accessing it.

There is no one-size-fits-all answer. Legal professionals handling highly sensitive matters may lean toward on-premise AI or private cloud options. Those less concerned about third-party data exposure can utilize public cloud services with proper security reviews. Above all, the solution must align with a firm's unique risk profile.

For legal professionals and law firms exploring generative AI options, fully assessing your needs and risks is essential to determine the most appropriate deployment strategy.

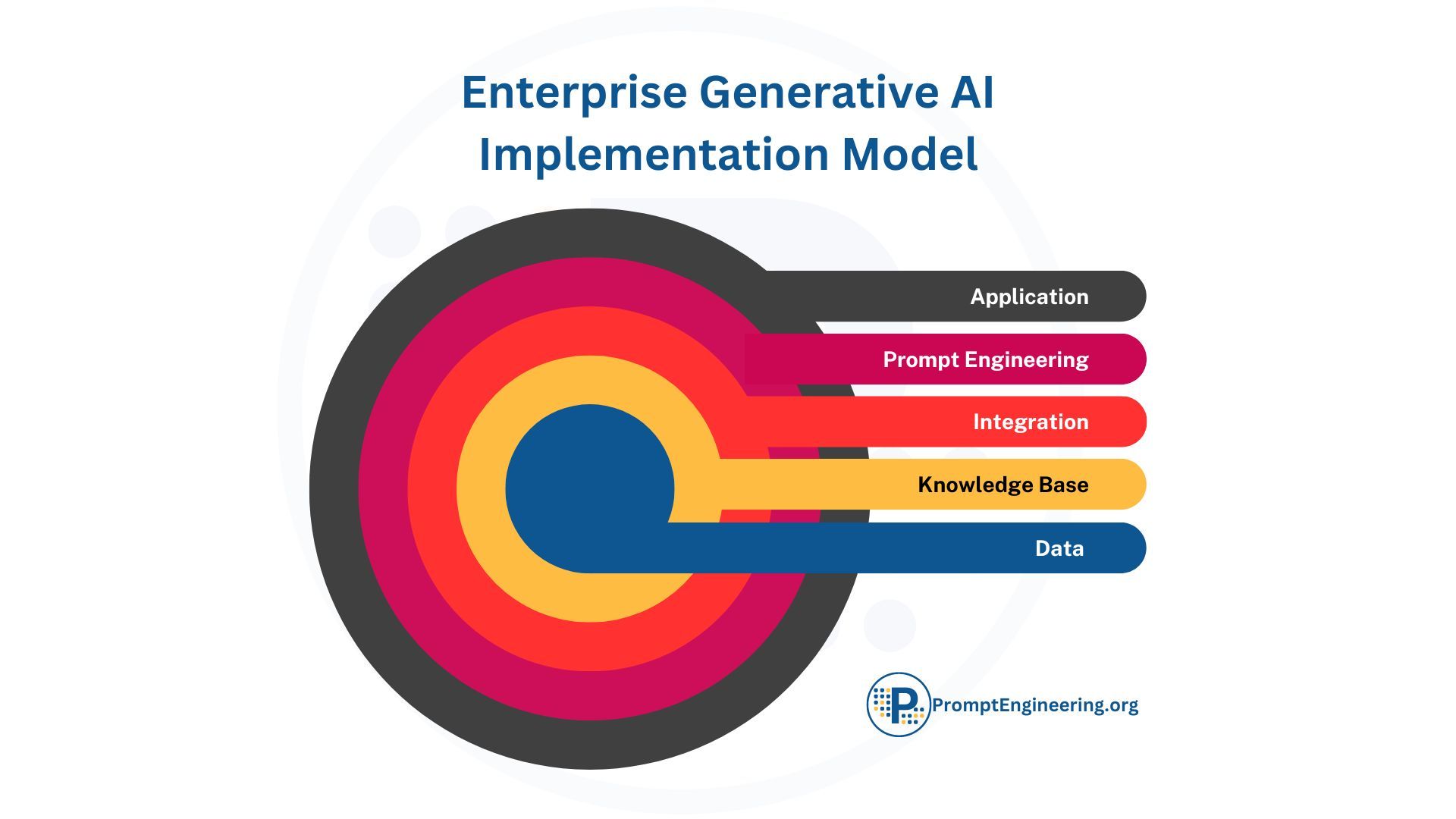

Whether SaaS, on-premise, or a hybrid approach, the solution must align with your specific requirements for security, ethics, and responsible innovation. For a structured methodology for adopting generative AI in a way tailored to your organization, review the article "A Strategic Framework for Enterprise Adoption of Generative AI."

This piece provides a detailed framework of considerations across areas like use cases, data strategy, controls, and change management. Taking a systematic approach will enable your firm to strategically navigate the generative AI landscape in a way that manages risk and realizes benefits. The framework supplies an excellent baseline to inform your planning and decision-making.

Mastering Prompt Engineering to Maximize AI's Value

Simply using generative AI tools out-of-the-box is unlikely to produce satisfactory legal results. To properly guide these systems, lawyers must master prompt engineering techniques. Methods like chain of thought reasoning and tree of thought prompting can dramatically improve output.

With chain of thought prompting, lawyers structure a series of leading questions to walk the AI through logical reasoning steps. This hews closer to an attorney's analytical process. Tree of thought prompting lays out prompts in a branch-like flow to explore different aspects of an issue.

These prompting tactics cue the AI to emulate a lawyer's structured thinking on problems. Without such techniques, generative AI tends to deliver only surface-level responses lacking nuance.
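A chain of thought prompt of the kind described above can be assembled with a small helper. This is a minimal sketch under stated assumptions: the function name, the wording of the instructions, and the sample facts are all hypothetical, and the reasoning steps follow the familiar issue, rule, application, conclusion pattern. The helper only builds the prompt text; sending it to a model is left out.

```python
def build_chain_of_thought_prompt(question, facts, steps):
    """Assemble a prompt that walks the model through explicit reasoning steps."""
    lines = [
        "You are assisting a licensed attorney. Reason step by step.",
        "",
        "Facts:",
    ]
    lines += [f"- {fact}" for fact in facts]
    lines += [
        "",
        f"Question: {question}",
        "",
        "Work through these steps in order, showing your reasoning for each:",
    ]
    lines += [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    lines += [
        "",
        "If you are unsure of a rule or authority, say so instead of guessing.",
    ]
    return "\n".join(lines)


# Hypothetical matter details, for illustration only.
prompt = build_chain_of_thought_prompt(
    question="Is the non-compete clause enforceable?",
    facts=[
        "The employee is a software engineer.",
        "The clause lasts two years and covers the entire country.",
    ],
    steps=[
        "Identify the legal issue.",
        "State the governing rule and where it comes from.",
        "Apply the rule to the facts listed above.",
        "State a tentative conclusion and note any uncertainty.",
    ],
)
print(prompt)
```

Keeping the steps as a list makes it easy to reuse the same structure across matters, and the closing instruction nudges the model to flag uncertainty, although it cannot prevent invented authorities.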

Expertly engineering prompts requires understanding the AI's strengths and limitations to frame appropriate guidance. Lawyers should consult best practices and tap technical expertise where needed.

But with patience and practice, prompt engineering expertise unlocks generative AI's potential while mitigating risks. It allows lawyers to better encode their unique legal skills and knowledge into outputs. Responsible and beneficial AI usage in law demands prompts as meticulously crafted as successful arguments.

The Need for Guidance

Fortunately, guidance is emerging to address these AI concerns, as new committees and task forces focus on developing best practices and policies.

Groups like state bar associations are analyzing ethical and liability risks, with the goal of providing lawyers a regulatory framework for safely leveraging AI efficiencies while avoiding pitfalls. Lawyers owe clients diligent representation, so using AI appropriately requires adhering to these developing norms.

The Legal Community Responds

Recognizing the challenges, several bar associations have taken proactive steps to provide clarity and guidance.

- New York State Bar Association: A task force announced in July will examine the implications of AI, weighing both its benefits and dangers, with the aim of drafting policies and suggesting legislation for responsible AI use.

- Texas State Bar: A workgroup is in place with the goal of unraveling the ethical intricacies and practical applications of AI. Their insights will shape the state bar's AI-related policies.

- California Bar: An advisory ethics opinion is in the works, set for release in November. The committee will shed light on the pros and cons of employing AI in legal practices, ensuring that lawyers can leverage AI without breaching ethical boundaries.

Empowering Lawyers with Knowledge

As generative AI continues rapidly evolving, there is a pressing need for guidance tailored specifically to aid legal professionals in leveraging these tools appropriately. While the promises of efficiency and automation are exciting, understanding the practicalities of using AI like ChatGPT effectively and ethically is essential.

While awaiting official guidance, legal professionals aren't left in the dark. We at PromptEngineering.org offer a growing body of resources to aid in understanding and effectively utilizing generative AI.

The rapid pace of evolution in AI means ongoing training will be critical. But with the right guidance, lawyers can stay ahead of the curve on leveraging these promising technologies to augment their expertise. The planned learning materials signify an important step toward responsible AI adoption in the legal sector.

The advent of generative AI marks a watershed moment for legal professionals. Its potential to enhance efficiency through automation is astounding yet fraught with risks. By embracing emerging guidance and honing prompt engineering expertise, lawyers can thoughtfully harness AI's power while safeguarding ethics.

But in the rapidly evolving landscape of AI, continued learning is imperative. With knowledge, foresight and wisdom, its benefits can be realized while avoiding pitfalls. The future of AI in law will be defined by those proactive enough to master its responsible application.